Deliver Real-Time Insights and Fresh Data with dbt and Striim on Snowflake Partner Connect

Use Striim to stream data from PostgreSQL to Snowflake and coordinate transform jobs in dbt

Benefits

Manage Scalable Applications

Integrate Striim with dbt to transform and monitor real time data SLAs

Capture Data Updates in real time

Use Striim’s postgrescdc reader for real time data updates

Overview

Striim is a unified data streaming and integration product that offers change capture (CDC) enabling continuous replication from popular databases such as Oracle, SQLServer, PostgreSQL and many others to target data warehouses like BigQuery and Snowflake.

dbt Cloud is a hosted service that helps data analysts and engineers productionize dbt deployments. It is a popular technique among analysts and engineers to transform data into usable formats and also ensuring if source data freshness is meeting the SLAs defined for the project. Striim collaborates with dbt for effective monitoring and transformation of the in-flight data. For example, if the expectation is that data should be flowing every minute based on timestamps, then dbt will check that property and make sure the time between last check and latest check is only 1 minute apart.

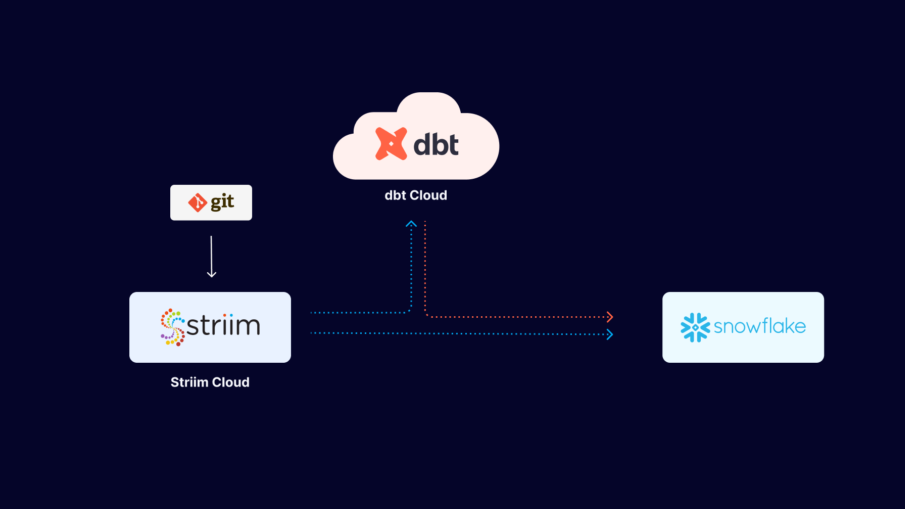

In this recipe, we have shown how Striim and dbt cloud can be launched from Snowflake’s Partner Connect to perform transformation jobs and ensure data freshness with Snowflake data warehouse as the target.

Core Striim Components

PostgreSQL CDC: PostgreSQL Reader uses the wal2json plugin to read PostgreSQL change data. 1.x releases of wal2jon can not read transactions larger than 1 GB.

Stream: A stream passes one component’s output to one or more other components. For example, a simple flow that only writes to a file might have this sequence

Snowflake Writer: Striim’s Snowflake Writer writes to one or more existing tables in Snowflake. Events are staged to local storage, Azure Storage, or AWS S3, then written to Snowflake as per the Upload Policy setting.

Benefits of DBT Integration with Striim for Snowflake

Striim and dbt work like magic with Snowflake to provide a simple, near real-time cloud data integration and modeling service for Snowflake. Using Striim, dbt, and Snowflake a powerful integrated data streaming system for real-time analytics that ensures fresh data

SLAs across your company.

Striim is unified data streaming and integration product that can ingest data from various sources including change data from databases (Oracle, PostgreSQL, SQLServer, MySQL and others), and rapidly deliver it to your cloud systems such as Snowflake. Data loses it’s much of its value over time and to make the most out of it, real-time analytics is the modern solution. With dbt, datasets can be transformed and monitored within the data warehouse. Striim streams real-time data into the target warehouse where analysts can leverage dbt to build models and transformations. Coordinating data freshness validation between Striim and dbt is a resilient method to ensure service level agreements. Companies can leverage Striim integration with dbt in production to make real-time data transformation fast and reliable.

We have demonstrated how to build a simple python script that pings the Striim API to fetch metadata from the Striim source (getOperationCounts()) that records the number of DDLs, deletes, inserts and updates. This data can be used by dbt to monitor freshness, schedule or pause dbt jobs. For example, run dbt when n inserts occur on the source table or Striim CDC is in-sync. The schematic below shows the workflow of dbt cloud integration with striim server. Users can configure DBT scheduling within Striim via dbt cloud API calls. dbt integration with Striim enhances the user’s analytics pipeline after Striim has moved data in real-time.

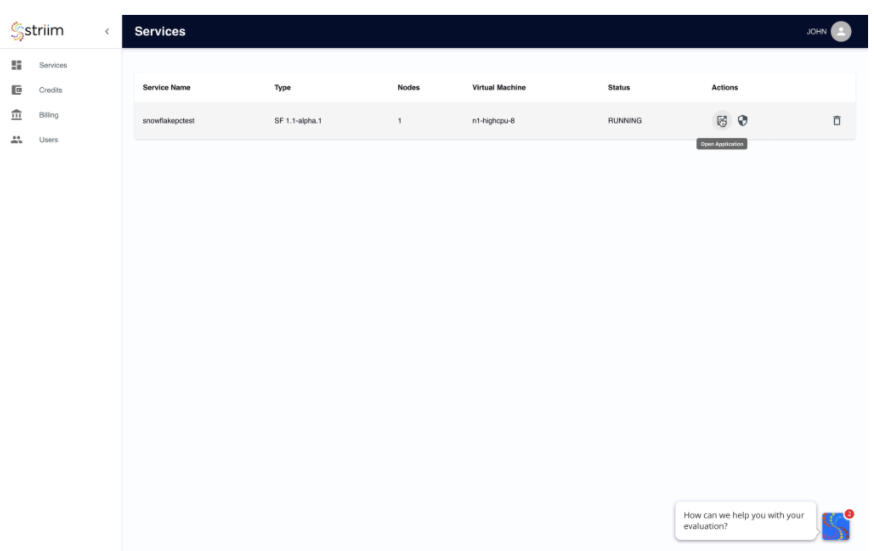

Step 1: Launch Striim Cloud from Snowflake Partner Connect

Follow the steps below to connect Striim server to postgres instance containing the source database:

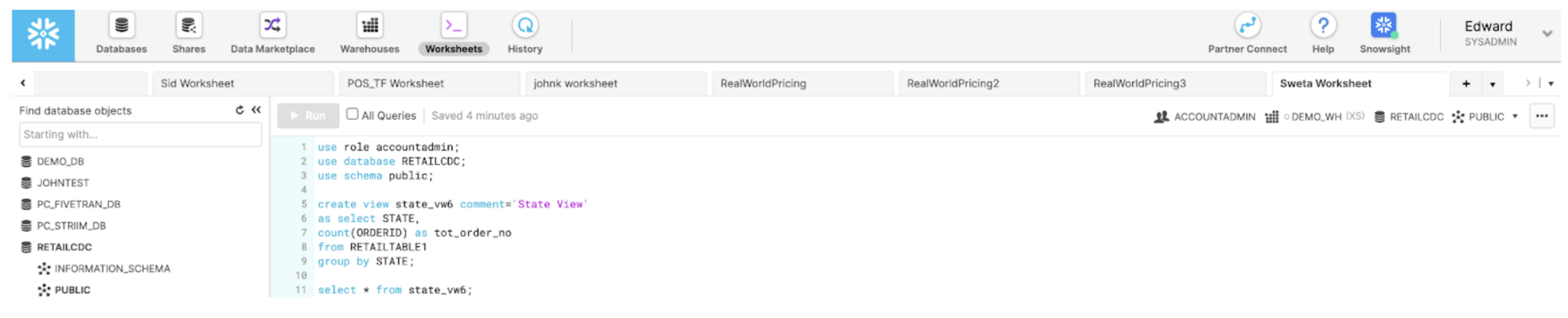

Launch Striim in Snowflake Partner Connect by clicking on “Partner Connect” in the top right corner of the navigation bar.

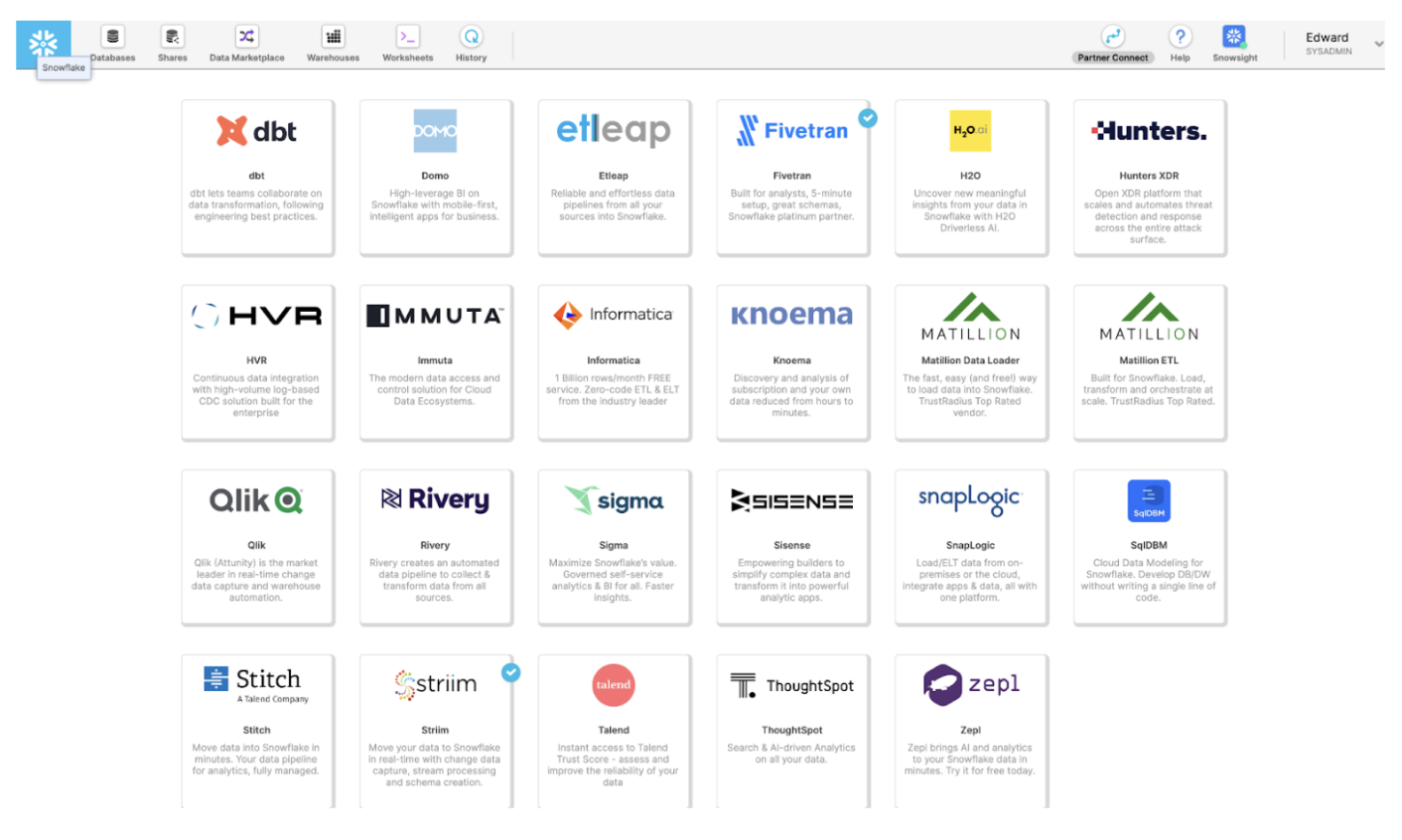

In the next window, you can launch Striim and sign up for a free trial.

Create your first Striim Service to move data to Snowflake.

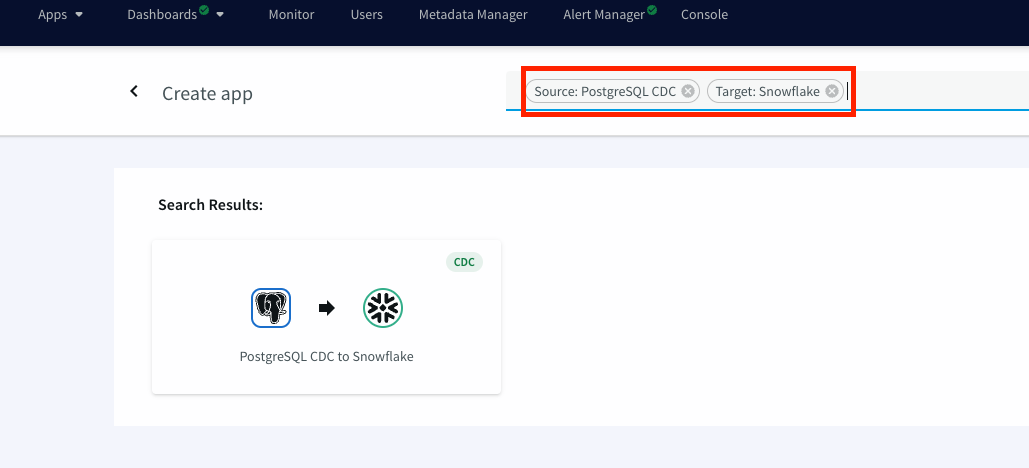

Launch the new service and use app wizard to stream data from PostgresCDC to Snowflake and Select Source and Target under create app from wizard:

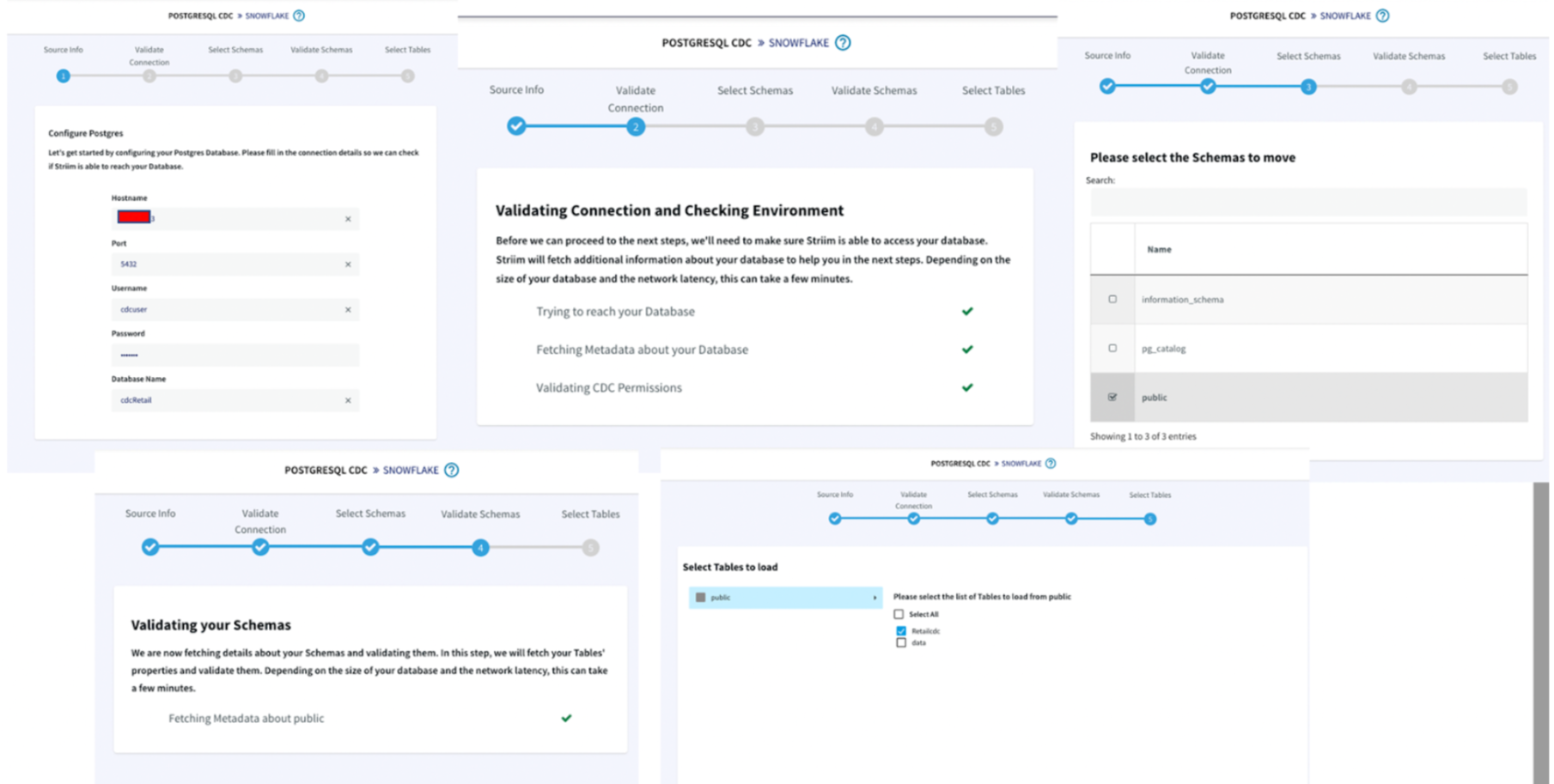

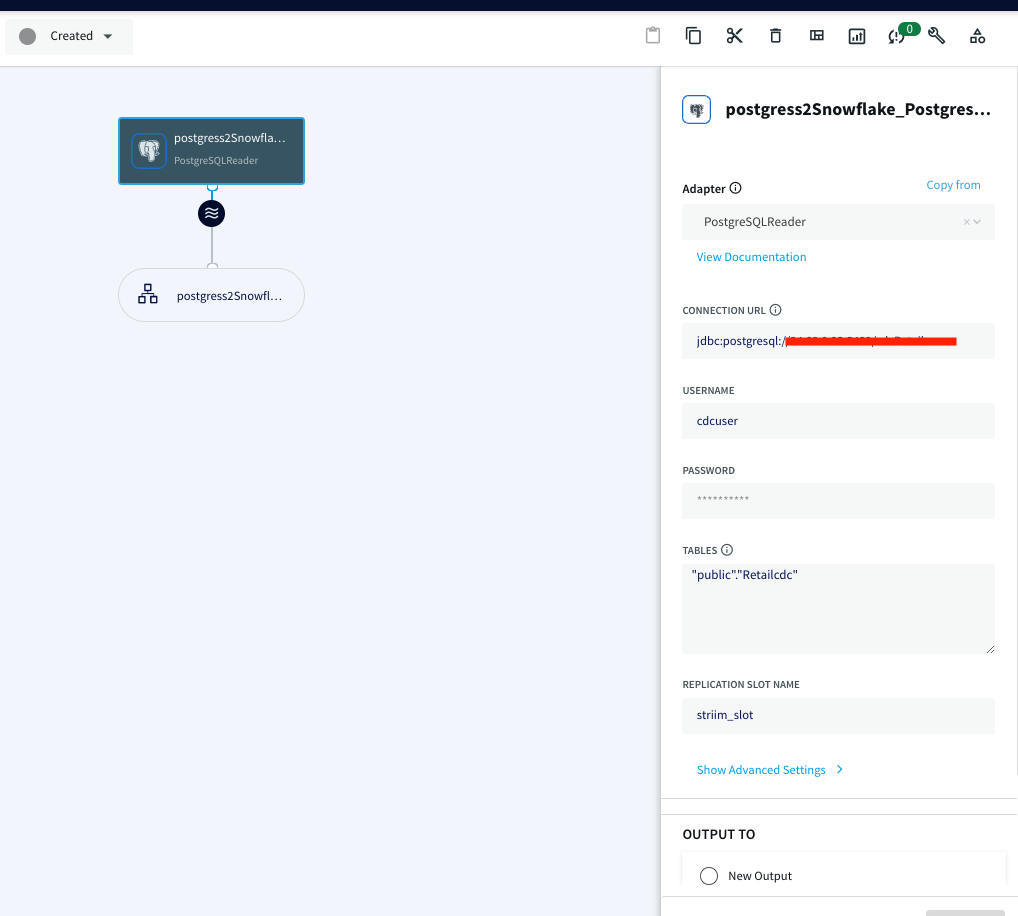

Give a name to your app and establish the connection between striim server and postgres instance.

Step 1 :

- Hostname: IP address of postgres instance

- Port : For postgres, port is 5432

- Username & Password: User with replication attribute that has access to source database

- Database Name: Source Database

Step 2 :The wizard will check and validate the connection between source to striim server

Step 3 :Select the schema that will be replicated

Step 4 :The selected schema is validated

Step 5 :Select the tables to be streamed

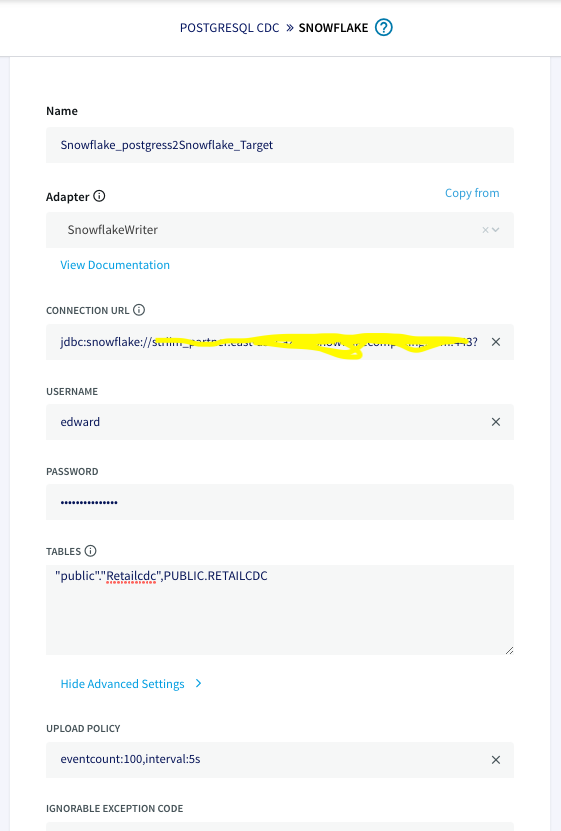

Once the connection with the source database and tables is established, we will configure the target where the data is replicated to.

The connection url has the following format:

jdbc:snowflake://YOUR_HOST-2.azure.snowflakecomputing.com:***?warehouse=warehouse_name &db=RETAILCDC&schema=public

After the source and targets are configured and connection is established successfully, the app is ready to stream change data capture on the source table and replicate it onto the target snowflake table. When there is an update on the source table, the updated data is streamed

through striim app to the target table on snowflake.

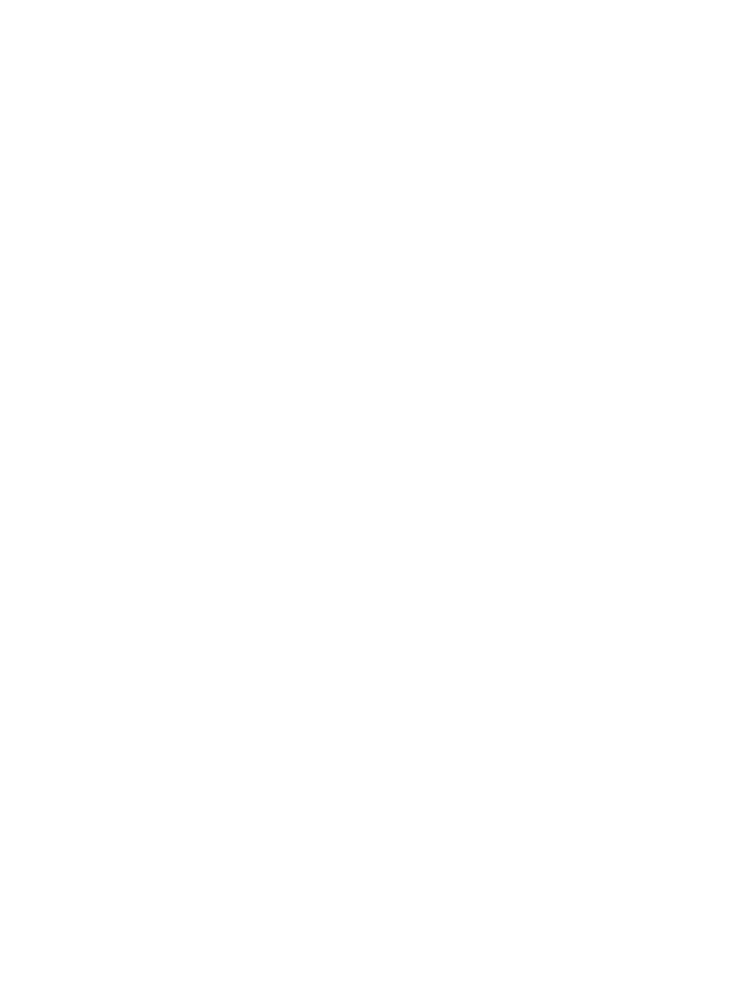

Step 2: Launch dbt cloud from Snowflake Partner Connect

Snowflake also provides dbt launches through Partner Connect. You can set up your dbt cloud account and project using this method. For more information on how to set up a fully fledged dbt account with your snowflake connection, managed repository and environments

please follow the steps in Snowflake’s dbt configuration page.

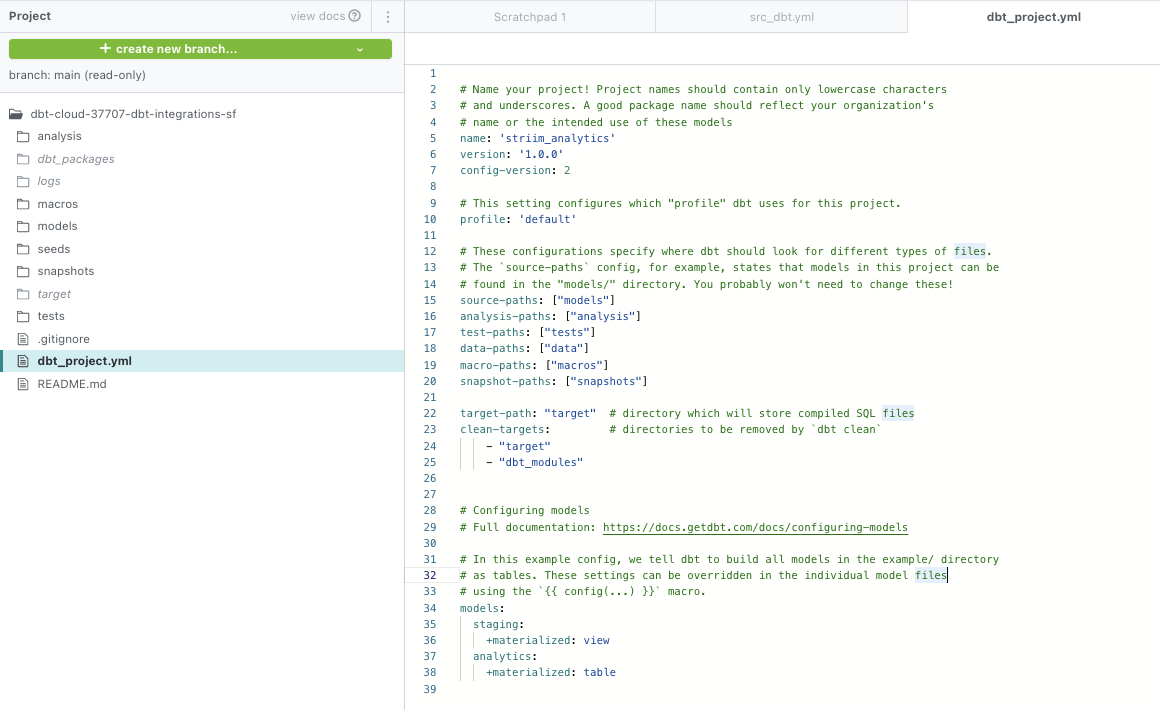

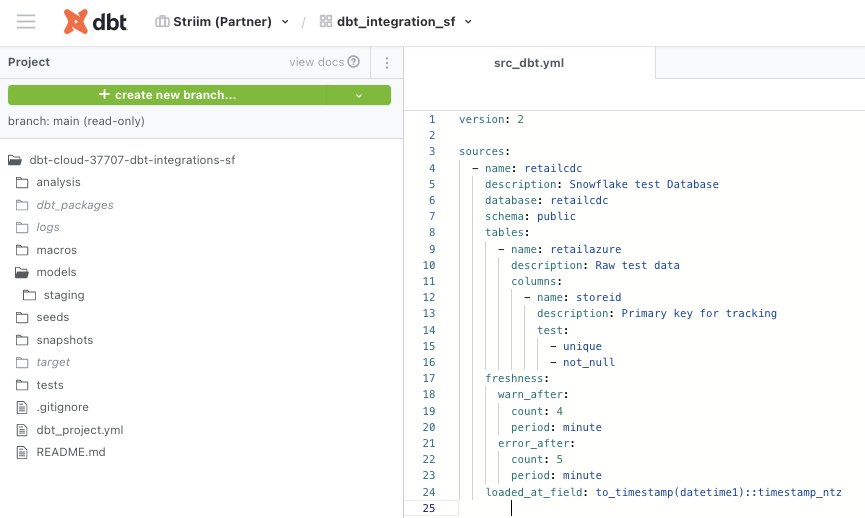

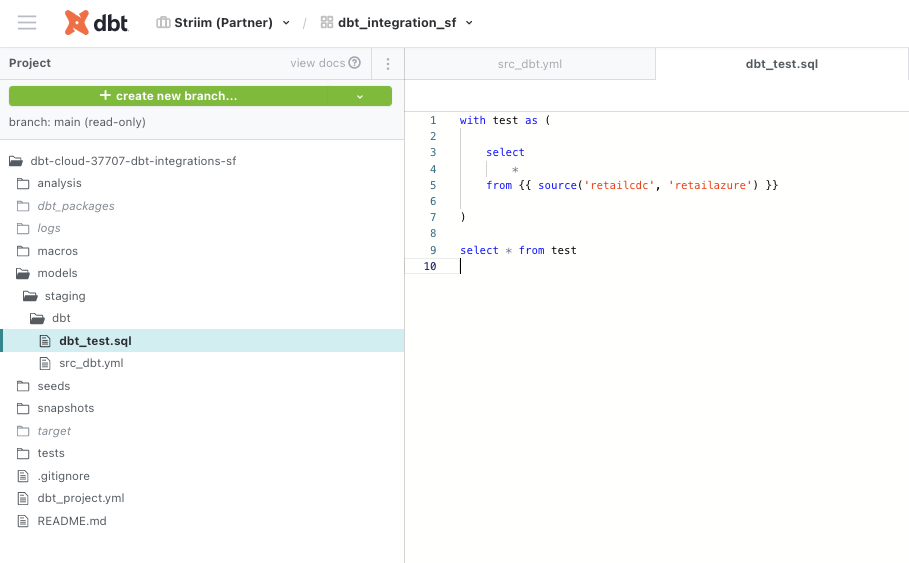

Step 3: Configure your project on cloud managed repository in dbt cloud

For information on how to set up the cloud managed repository, please refer to this documentation.

The dbt_project.yml, model yaml files and sql staging files for this project were configured as follows. Please follow this github repo to download the code.

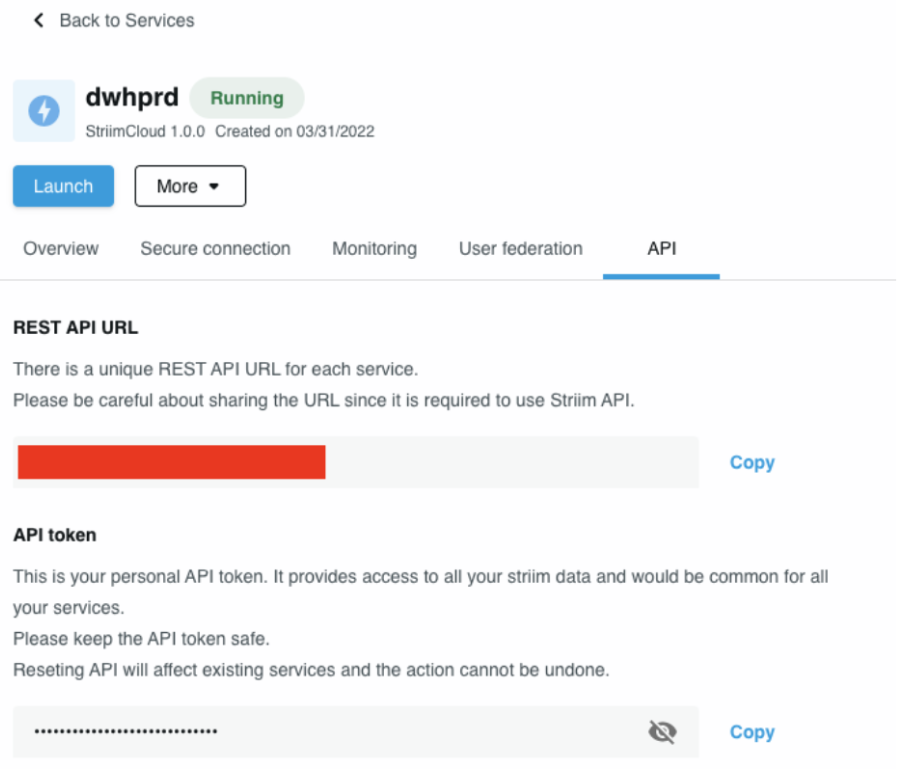

Step 4: Add Striim’s service API in the Python Script to fetch Striim app’s metadata

We will use python script to ping Striim’s service API to gather metadata from the Striim app. The metadata is compared against benchmarks to determine the SLAs defined for the project. The python script for this project can be downloaded from here.

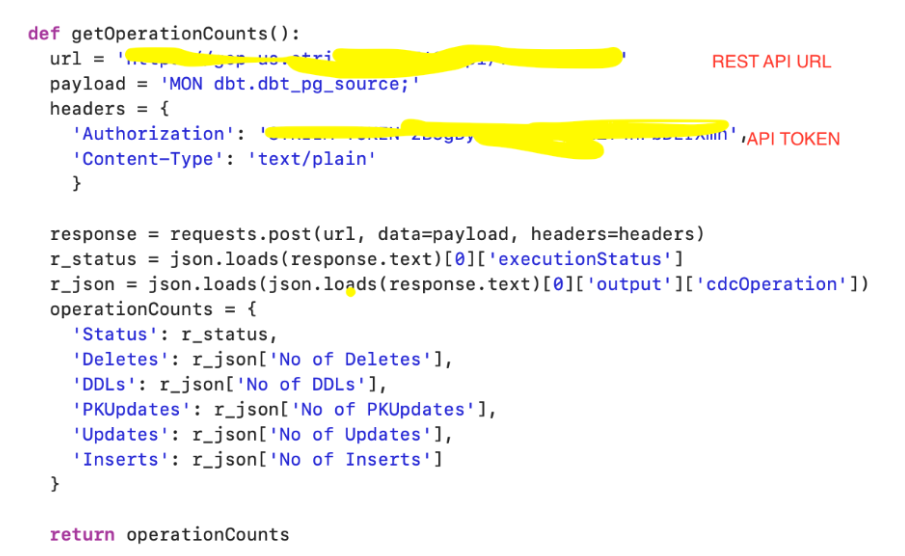

In the python script, enter the REST API URL as connection url and source name in payload.

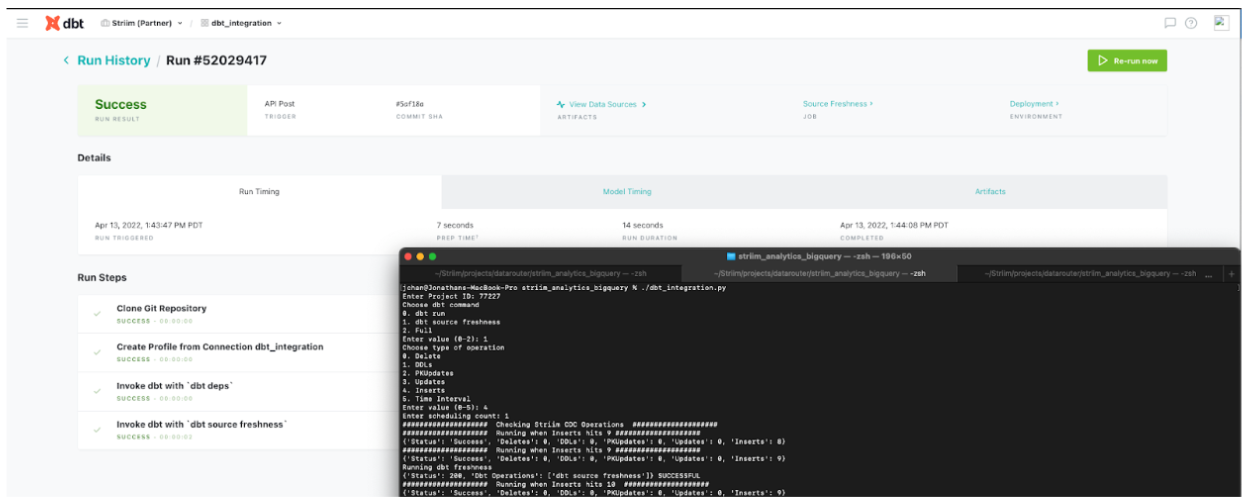

Step 5: Run the Python Script

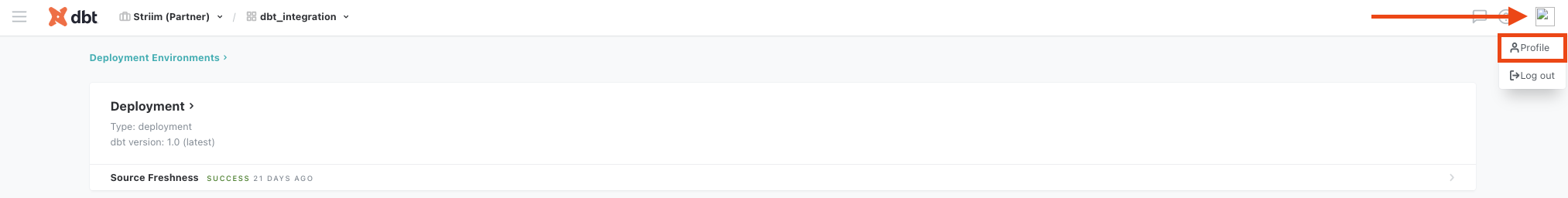

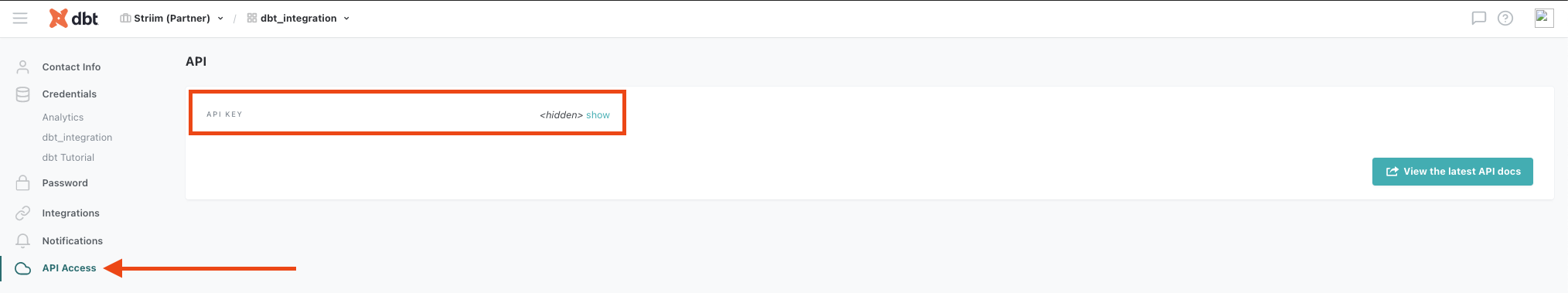

Once the dbt project is set up, the python script that hits the Striim Cloud Service url to get the metadata from striim server acts as a trigger to run dbt transformation and monitoring. To hit the dbt cloud API, the following commands are used. The account id and

job id can be retrieved from dbt cloud url. The authorization token can be found under API access on the left navigation bar.

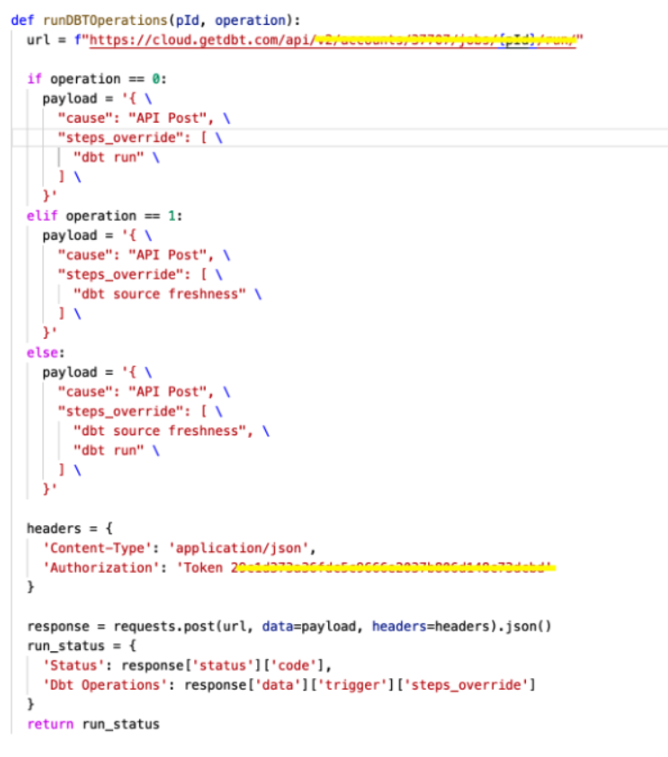

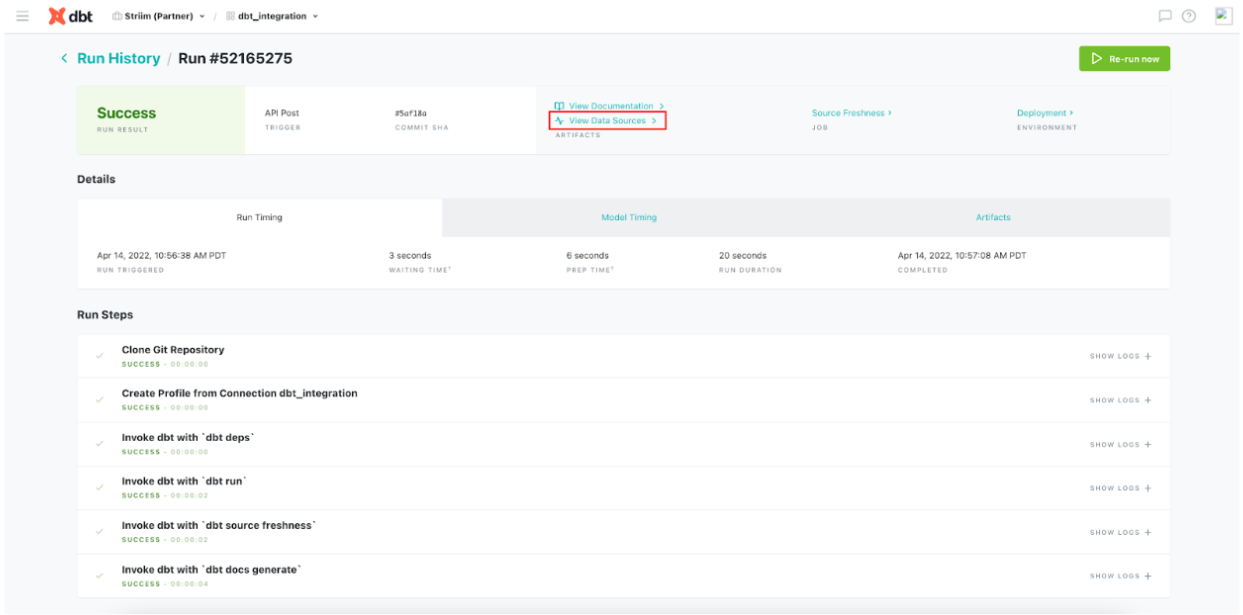

The following snapshots are from the dbt run that shows the inserts and source data freshness.

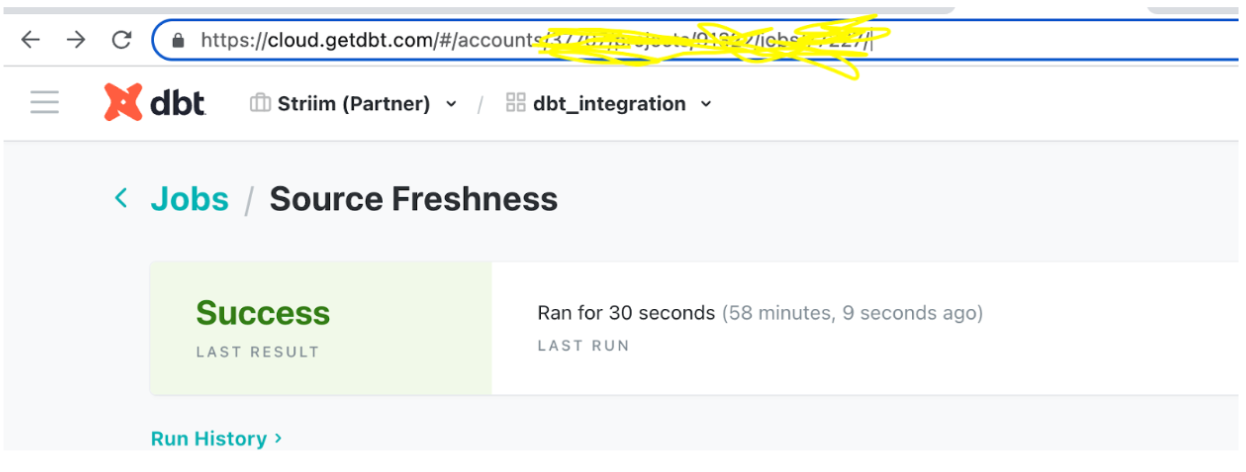

Enabling Source Freshness

To ensure you’re meeting data freshness SLAs for all your business stakeholders, you can monitor Source Freshness in dbt cloud.

Follow this document to enable source freshness of the real time data flowing from PostgreSQL through Striim to BigQuery. The source freshness snapshots can be checked under view data

source.

Video Walkthrough

Here is the video showing all the dbt run for the above tutorial.

Setting Up dbt and Striim

Step 1: Configure your dbt project

Configure your project on cloud managed repository in dbt cloud as shown in the recipe

Step 2: Edit the Python Script

Download the Python Script from our github repository and configure the endpoints

Download the TQL file and dataset from github repo and configure your source and target

Deploy and run the Striim app for data replication

Run the Python Script and enable source freshness on dbt to monitor data SLAs

Wrapping Up: Start Your Free Trial

Our tutorial showed you how a striim app can run with dbt, an open source data transformation and monitoring tool. With this feature you can monitor your data without interrupting the real-time streaming through Striim. dbt can be used with popular adapter

plugins like PostgreSQL, Redshift, Snowflake and BigQuery, all of which are supported by Striim. With Striim’s integration with major databases and data warehouses and powerful CDC capabilities, data streaming and analytics becomes very fast and efficient.

As always, feel free to reach out to our integration experts to schedule a demo, or try Striim for free here.

Tools you need

Striim

Striim’s unified data integration and streaming platform connects clouds, data and applications.

Snowflake

Snowflake is a cloud-native relational data warehouse that offers flexible and scalable architecture for storage, compute and cloud services.

PostgreSQL

PostgreSQL is an open-source relational database management system.

dbt cloud

dbt™ is a transformation workflow that lets teams quickly and collaboratively deploy analytics code following software engineering best practices like modularity, portability, CI/CD, and documentation.