Deploy and Run Striim on Google Kubernetes Engine

Integrate Striim for data streaming in your containerized application

Benefits

Manage Scalable Applications

Integrate Striim with your application inside Kubernetes Engine

Capture Data Updates in real time

Use Striim’s PostgreSQL CDC reader for real-time data updates

Overview

Kubernetes is a popular tool for creating scalable applications due to its flexibility and delivery speed. When you are developing a data-driven application that requires fast real-time data streaming, it is important to use a tool that does the job efficiently. This is where Striim fits into your system. Striim is a unified data streaming and integration product that offers change data capture (CDC), enabling continuous replication from popular databases such as Oracle, SQL Server, PostgreSQL, and many others to target data warehouses like BigQuery and Snowflake.

This tutorial shows how to run a Striim application in a Kubernetes cluster that streams data from PostgreSQL to BigQuery in real time. It also covers how to monitor and access Striim’s logs and how to poll Striim’s REST API to regulate the data stream.

Core Striim Components

PostgreSQL CDC: PostgreSQL Reader uses the wal2json plugin to read PostgreSQL change data. 1.x releases of wal2json cannot read transactions larger than 1 GB.

Stream: A stream passes one component’s output to one or more other components. For example, a simple flow that only writes to a file might have this sequence

BigQueryWriter: Striim’s BigQueryWriter writes the data from various supported sources into Google’s BigQuery data warehouse to support real time data warehousing and reporting.

Step 1: Deploy Striim on Google Kubernetes Engine

Follow the steps below to configure your Kubernetes cluster and start the required pods:

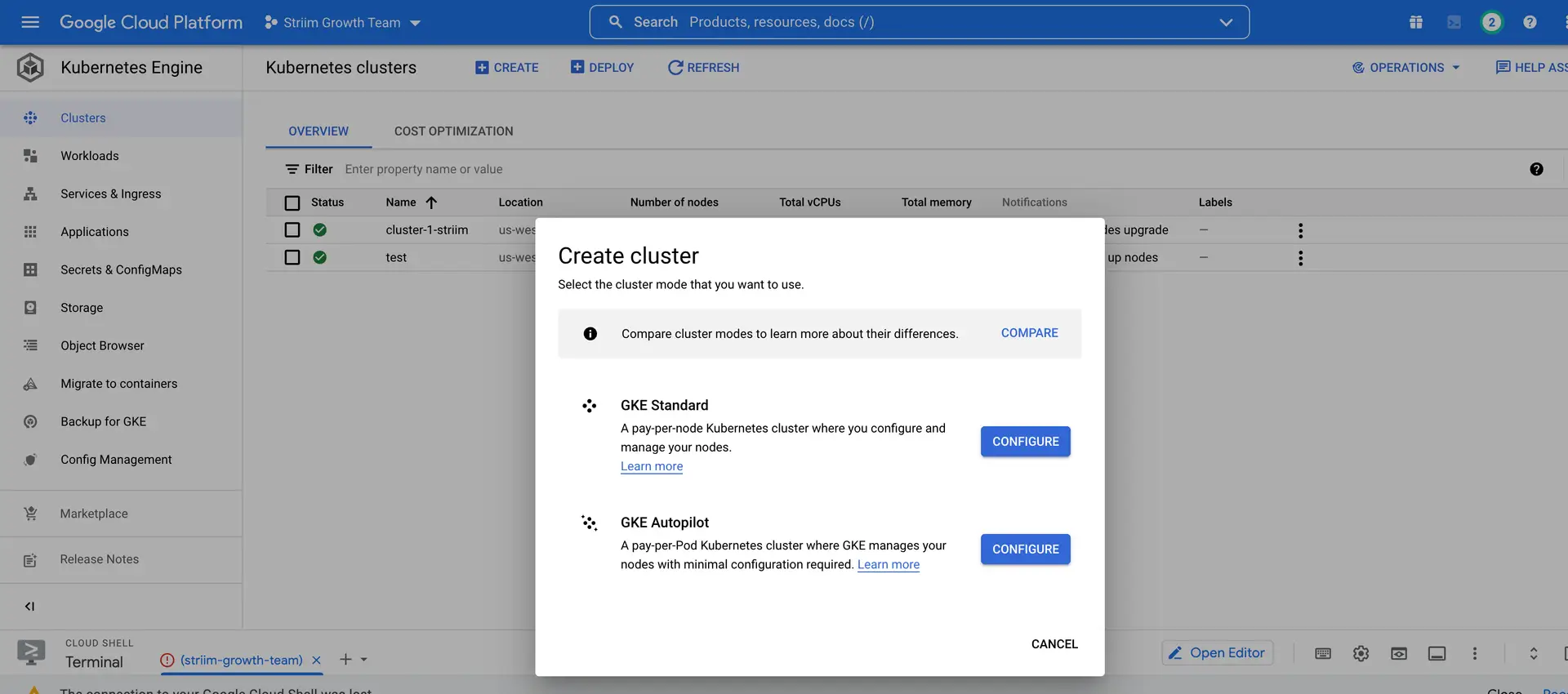

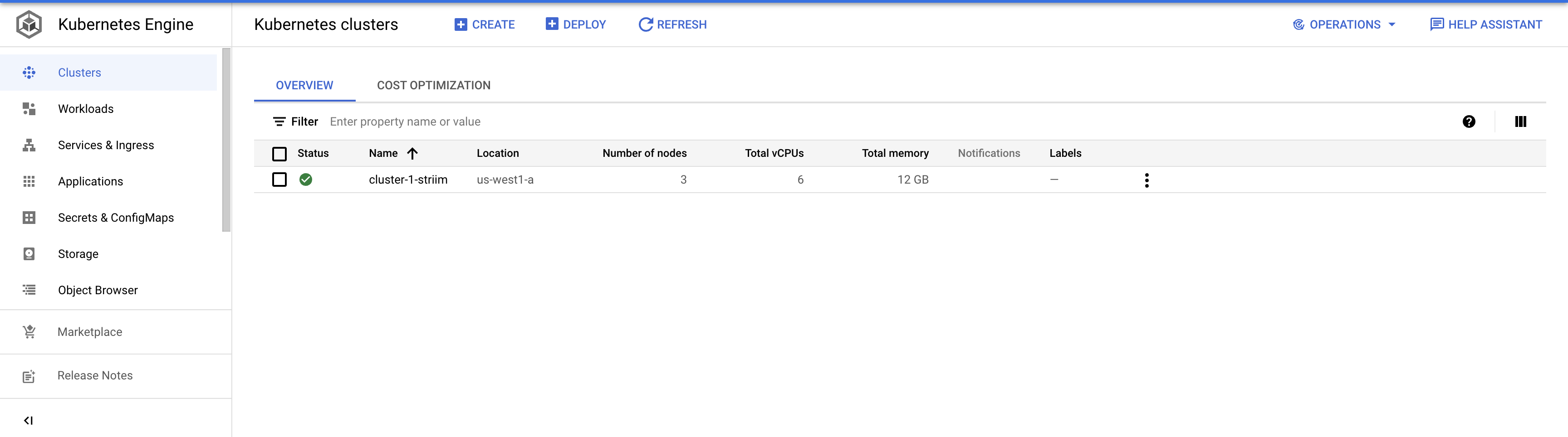

- Create a cluster on GKE that will run the striim-node and striim-metadata pods.

- In the GKE console, click Clusters and configure a cluster with the desired number of nodes. Once the cluster is created, run the following command to connect to the cluster.

gcloud container clusters get-credentials <YOUR_CLUSTER_NAME> --zone <YOUR_COMPUTE_ZONE>

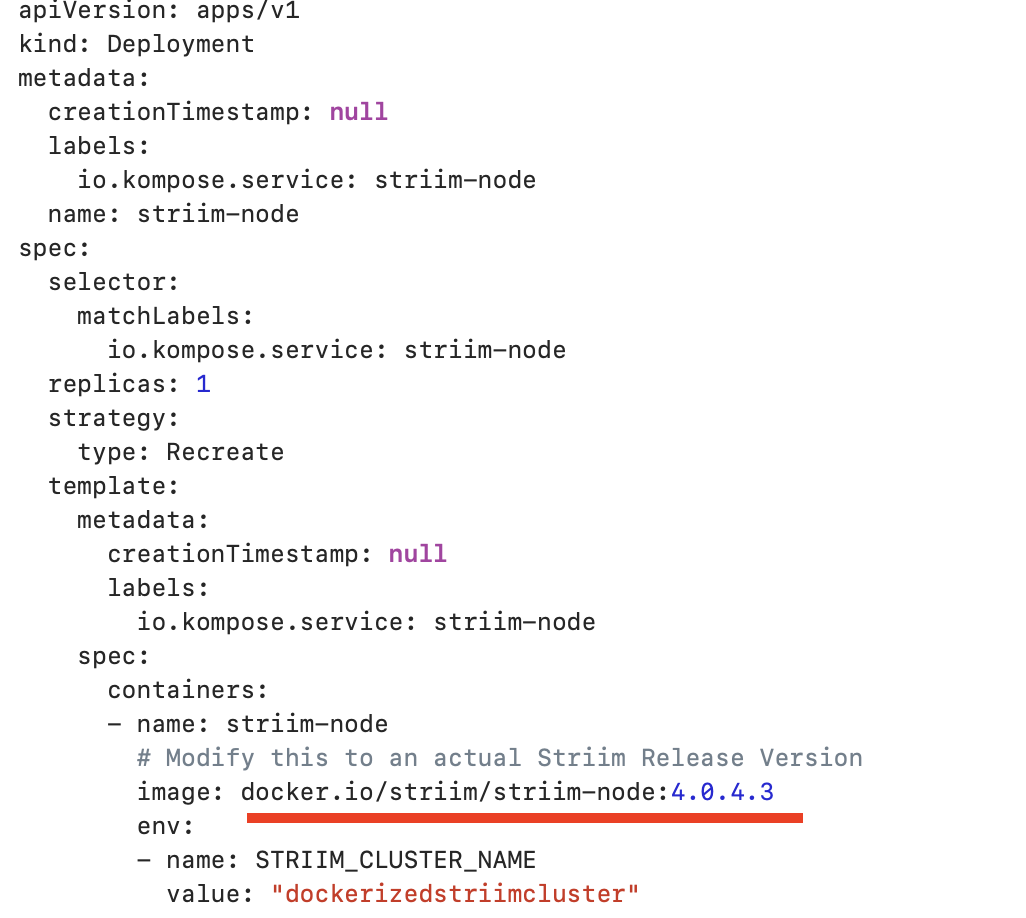

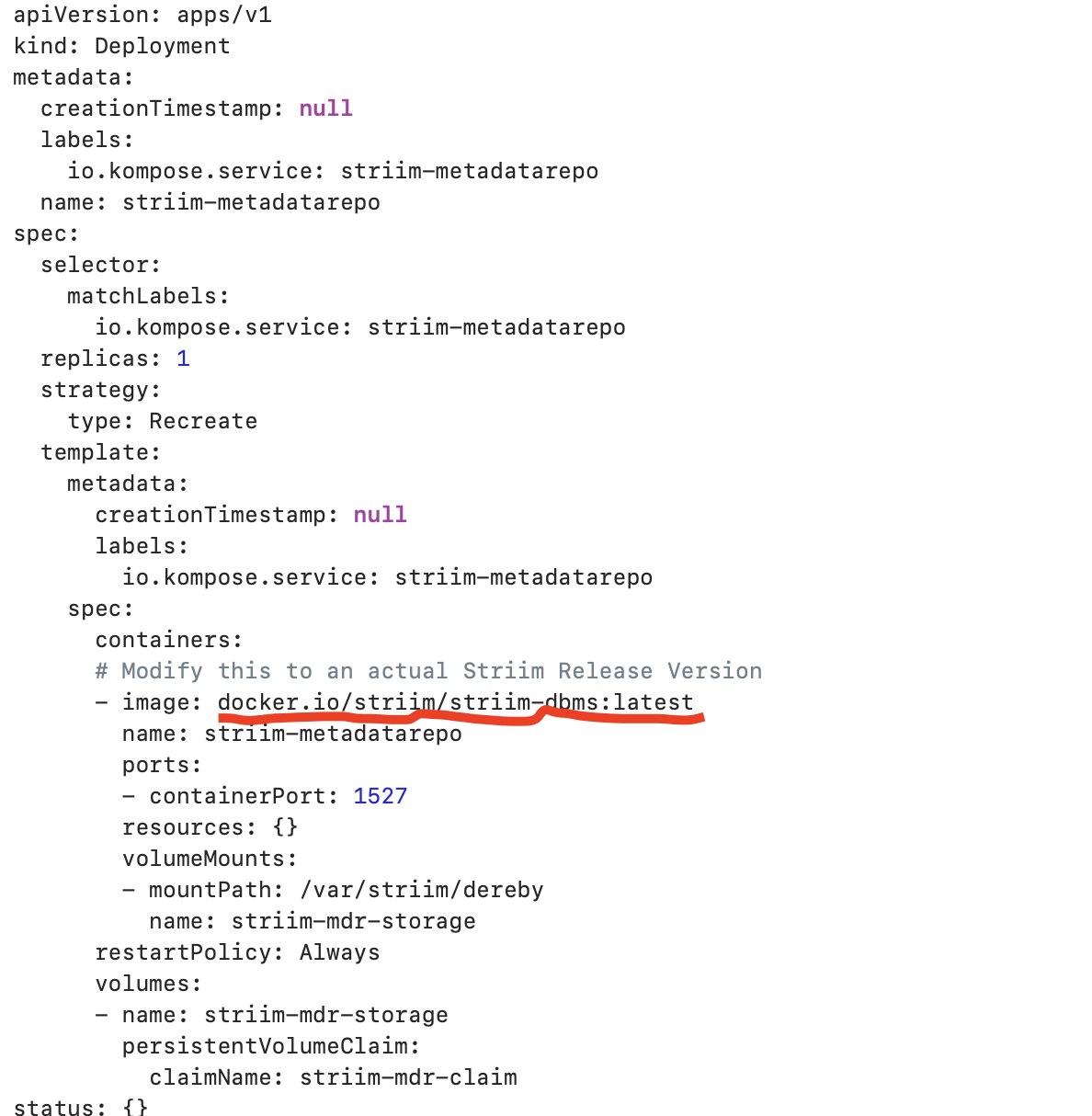

- Configure the YAML file to run the Docker containers inside the Kubernetes cluster. You can find a sample YAML file here that deploys the striim-node and metadata containers. Modify the tags of the striim-dbms and striim-node images with the latest version as shown below. Modify COMPANY_NAME, FIRST_NAME, LAST_NAME and COMPANY_EMAIL_ADDRESS for the 7-day free trial, or, if you have a license key, modify the license key section of the YAML file.

Upload the YAML file to your Google Cloud environment.

Run the following command to deploy with the yaml file. The pods will take some time to start and run successfully:

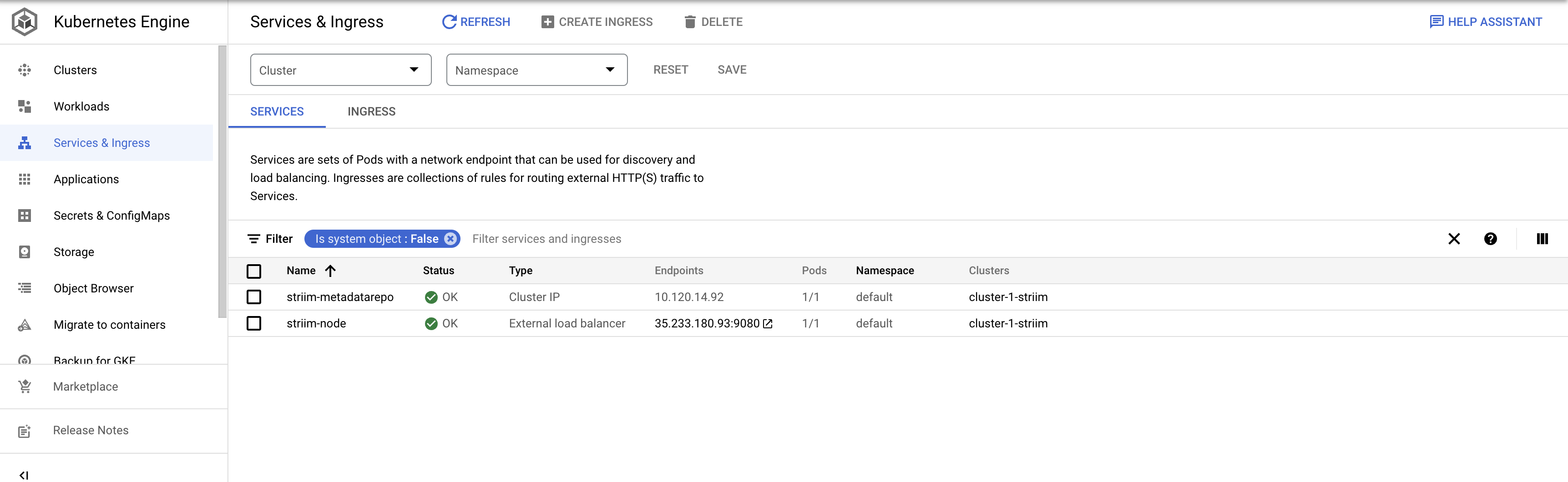

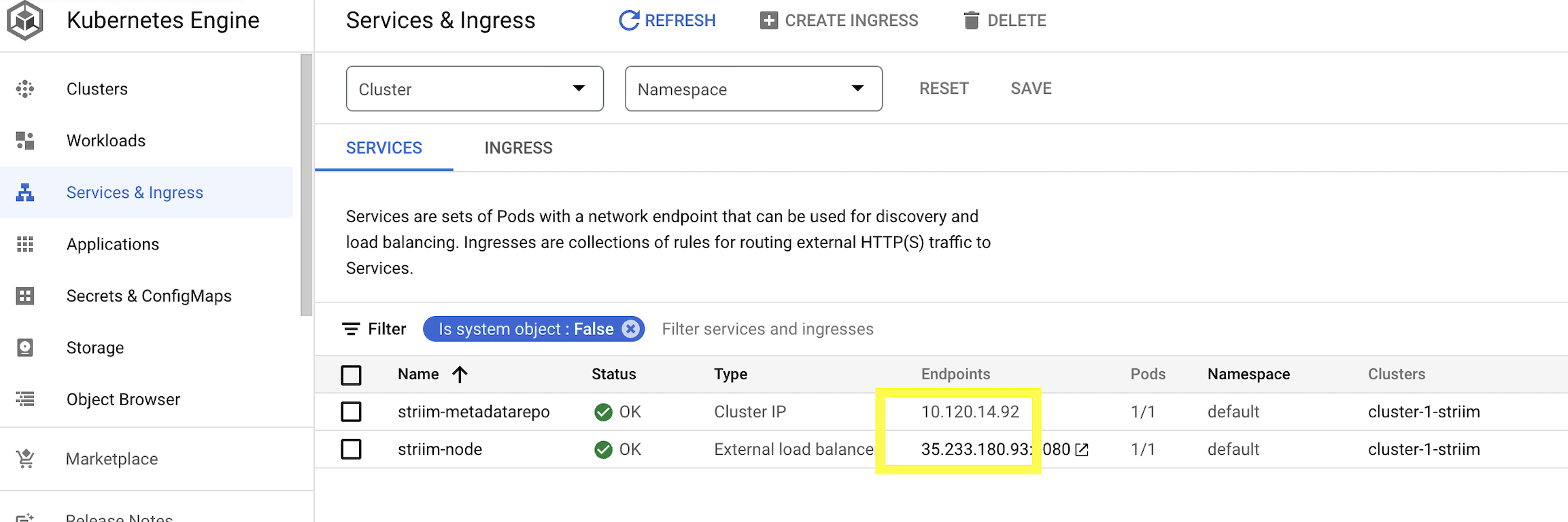

kubectl create -f <YAML_FILE_NAME>

Go to Services & Ingress to check whether the pods were created successfully. The OK status indicates that the pods are up and running.
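As a command-line alternative to checking the console, the readiness check could be sketched as follows. The function name check_striim_pods and the default timeout are assumptions for illustration, and the sketch assumes the Striim pods run in the current namespace.

```shell
#!/usr/bin/env bash
# Sketch: confirm kubectl can reach the cluster, then wait for the pods.
# check_striim_pods and the 300s default are hypothetical choices.

check_striim_pods() {
  local timeout="${1:-300s}"   # how long to wait for readiness

  # List the cluster nodes; this fails if get-credentials has not been run.
  kubectl get nodes || return 1

  # Block until every pod in the current namespace reports Ready.
  kubectl wait --for=condition=Ready pod --all --timeout="$timeout" || return 1

  # Print the final pod status, including the striim-node and metadata pods.
  kubectl get pods
}
```

After sourcing the script, calling check_striim_pods 600s would wait up to ten minutes, which may be useful because the pods take some time to start.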

Step 2: Configure the KeyStore Password

Check the pod running striim-node with the following command, then open a shell in that pod for the steps that follow.

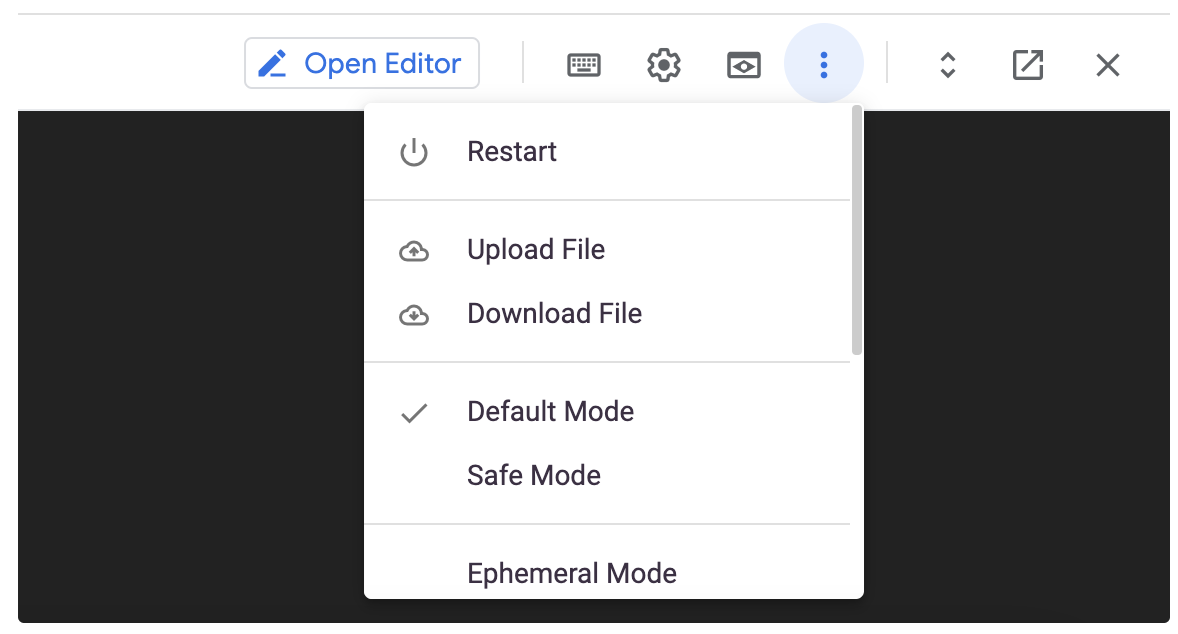

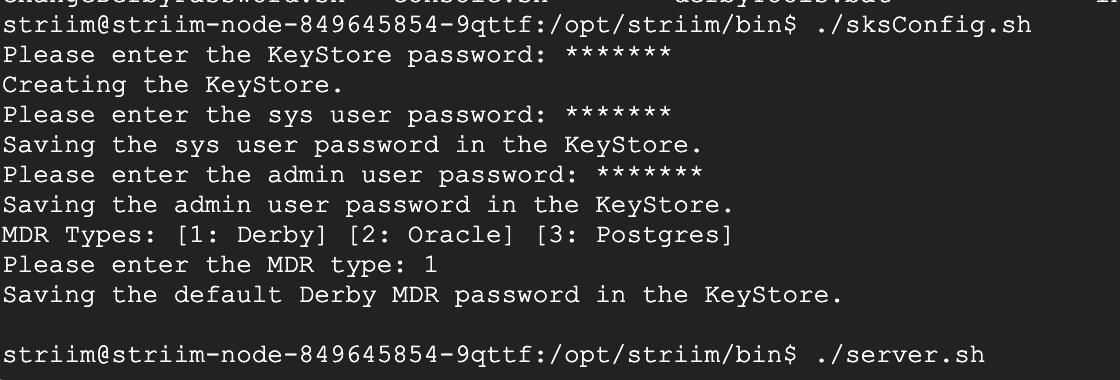

kubectl logs {striim-node-***pod name}

Enter the directory /opt/striim/bin/ and run the sksConfig.sh file to set the KeyStore passwords.

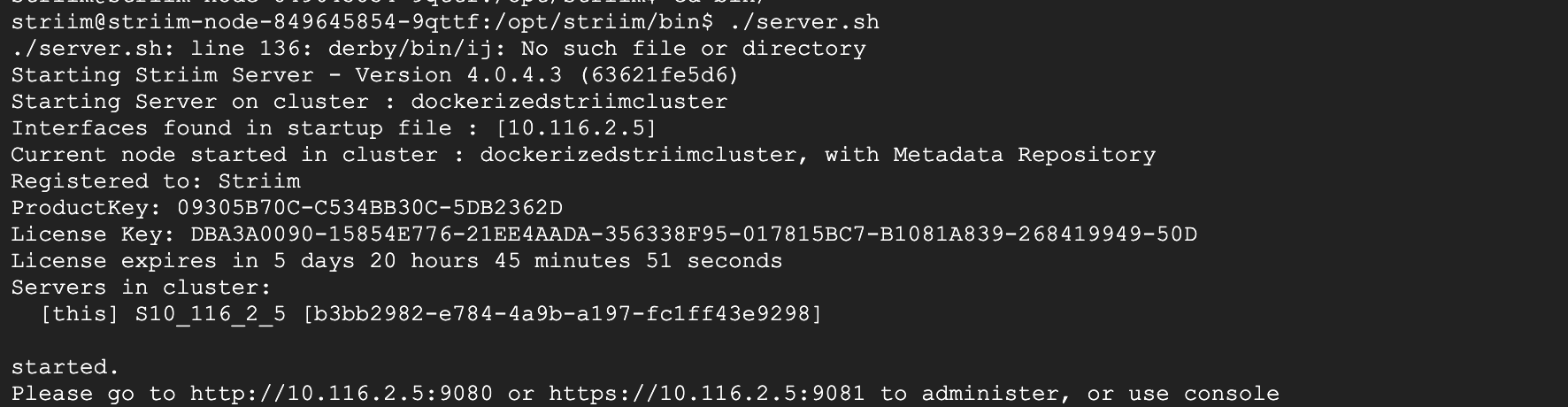

Run the server.sh file to launch the Striim server in the Kubernetes cluster. When prompted for the cluster name, enter dockerizedstriimcluster or the cluster name from the YAML file.
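The steps in this section run inside the striim-node pod. Note that kubectl logs only prints a pod's output, so opening a shell in the pod typically requires kubectl exec. The helper below is a minimal sketch: the function name striim_node_shell is hypothetical, and it assumes the pod name contains "striim-node" and that the image ships /bin/bash.

```shell
#!/usr/bin/env bash
# Sketch: open an interactive shell in the striim-node pod.
# Assumptions: pod name contains "striim-node"; /bin/bash exists in the image.

striim_node_shell() {
  local pod

  # Pick the first pod whose name contains "striim-node" (e.g. pod/striim-node-...).
  pod="$(kubectl get pods -o name | grep striim-node | head -n 1)"
  if [ -z "$pod" ]; then
    echo "no striim-node pod found" >&2
    return 1
  fi

  # Start an interactive shell inside that pod.
  kubectl exec -it "$pod" -- /bin/bash
}
```

Once inside the pod, change to /opt/striim/bin/ and run sksConfig.sh and server.sh as described in this step.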

Step 3: Access Striim Server UI

To create and run data streaming applications from the UI, click the endpoint of striim-node as shown below. This redirects you to the Striim user interface.

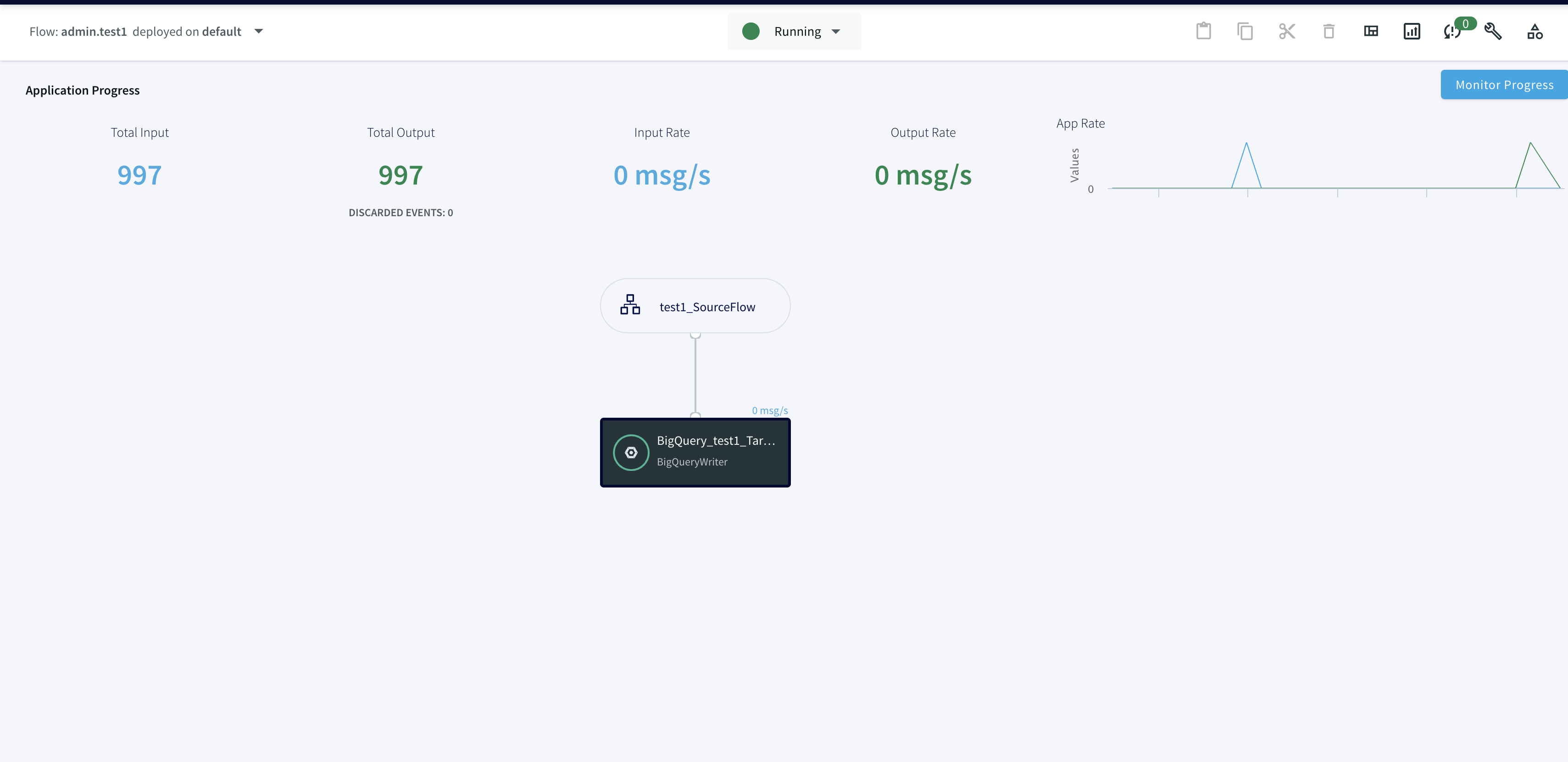

Step 4: Create and Run the PostgreSQL CDC to BigQuery Streaming App

Once you are in the UI, you can follow the steps shown in this recipe to create a PostgreSQL to BigQuery streaming app from the wizard.

Monitoring Event Logs and Polling Striim’s REST API

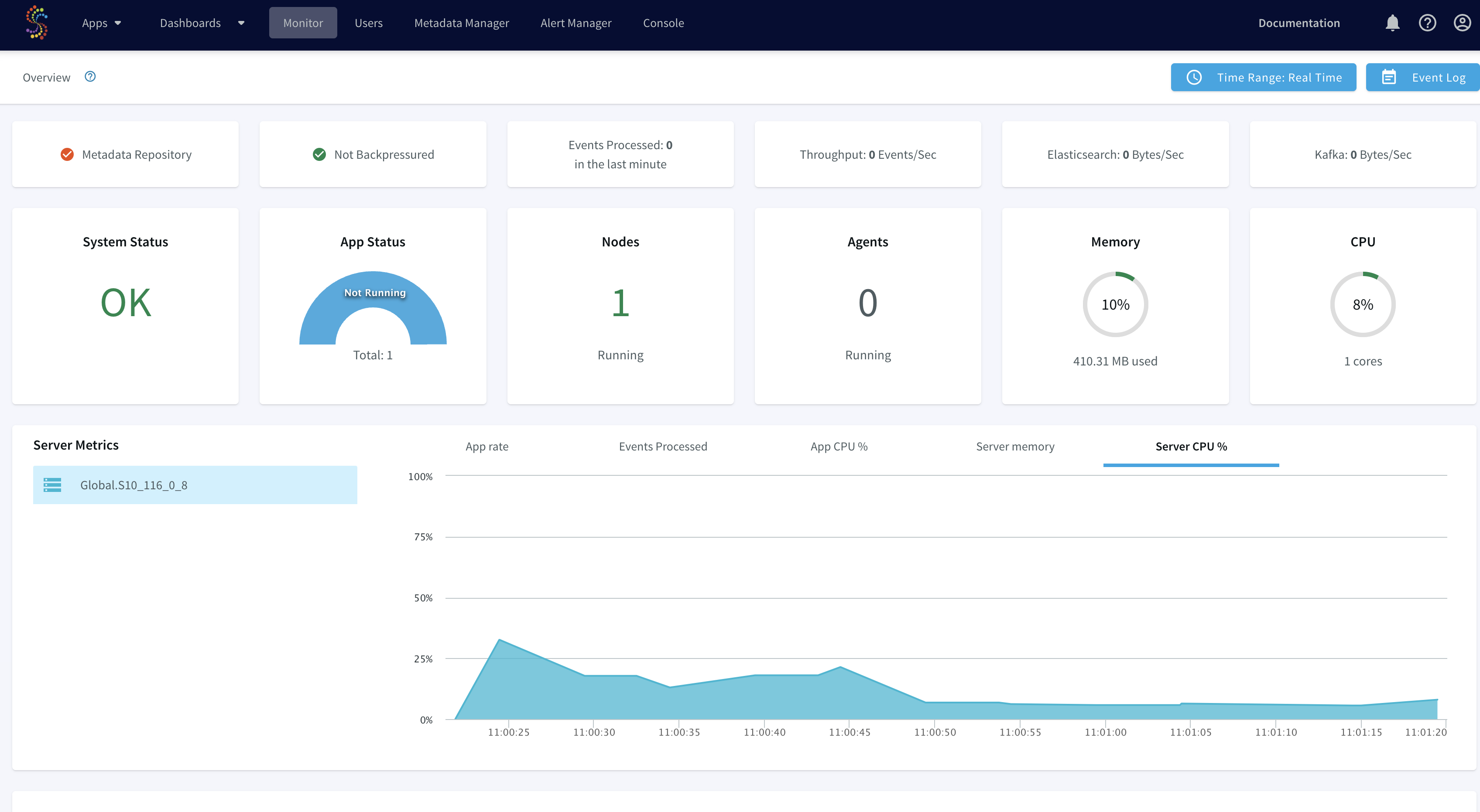

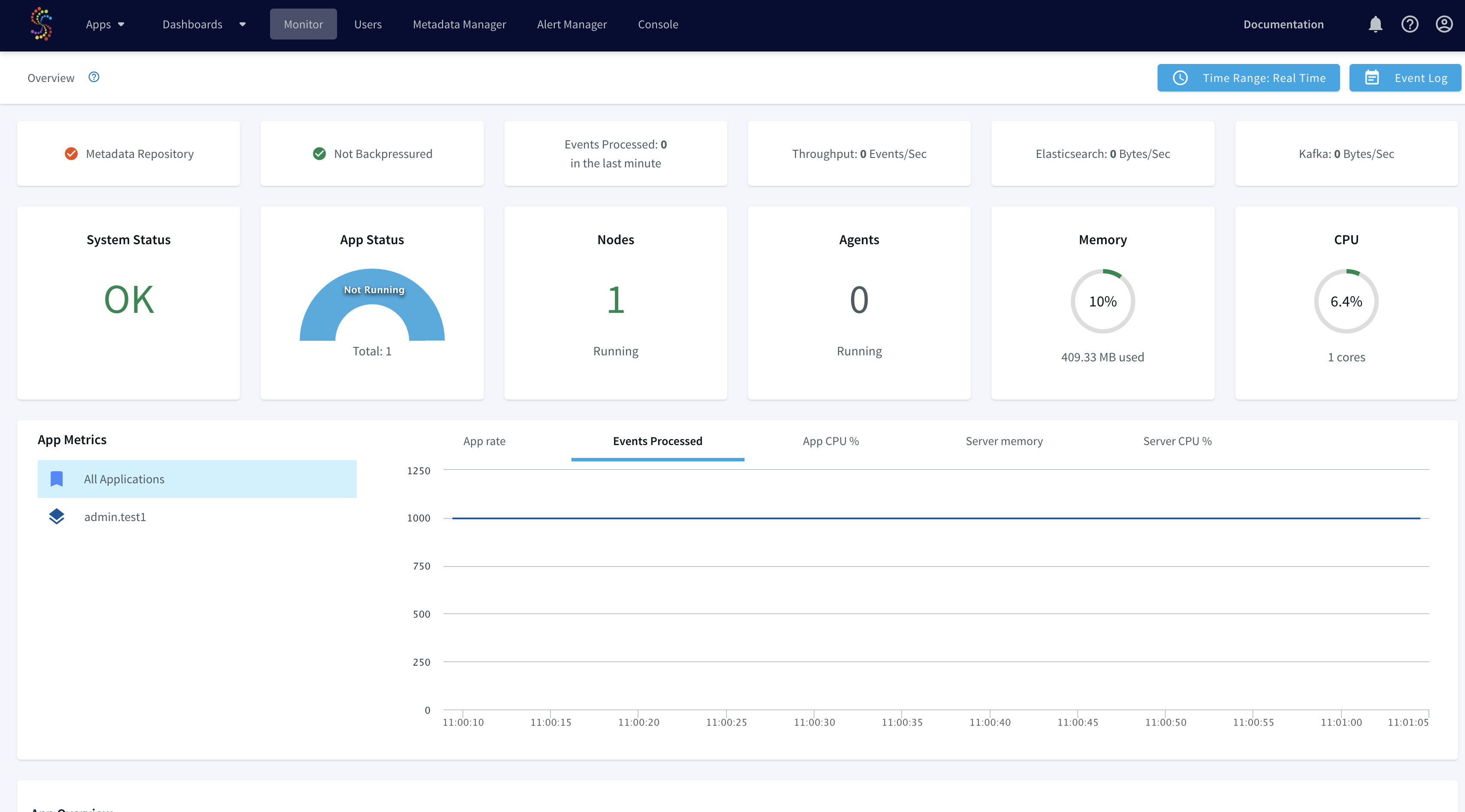

You can use the Monitor page in the web UI to retrieve summary information for the cluster and each of its applications, servers, and agents. To learn more about monitoring, please refer to this documentation.

You can also poll Striim’s REST API to access the data stream and monitor the SLAs of the data flow. For example, you can integrate the application with dbt to check whether source data freshness meets the SLAs defined for the project. An authentication token must be included in all REST API calls using the token parameter. You can get a token using any REST client. The CLI command to request a token is:

curl -X POST -d'username=admin&password=******' http://{server IP}:9080/security/authenticate

For example:

curl -X POST -d'username=admin&password=******' http://34.127.3.58:9080/security/authenticate

{"token":"01ecc591-****-1fe1-9448-4640d**0e52*"}

To learn more about Striim’s REST API, refer to the API guide in Striim’s documentation.
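For scripted polling, the token request can be wrapped in a small helper that extracts the token value from the JSON response. This is a minimal sketch: the function names extract_token and request_token are hypothetical, and it assumes the response has the {"token":"..."} shape shown in the example and that the credentials contain no characters that need URL encoding.

```shell
#!/usr/bin/env bash
# Sketch: request a Striim REST API token and extract it from the response.
# extract_token and request_token are hypothetical helper names.

# Read a response such as {"token":"..."} on stdin and print the token value.
extract_token() {
  sed -n 's/.*"token"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Call the authenticate endpoint on port 9080 and print only the token.
request_token() {
  local host="$1" user="$2" password="$3"
  curl -s -X POST -d "username=${user}&password=${password}" \
    "http://${host}:9080/security/authenticate" | extract_token
}
```

A call such as TOKEN="$(request_token "<server IP>" admin "<password>")" would store the token in a variable for later requests. How the token is passed to each endpoint is described in the API guide.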

Deploying Striim on Google Kubernetes Engine

Step 1: Deploy Striim on Google Kubernetes Engine using a YAML file

You can find the YAML file here. Make the necessary changes to deploy Striim on Kubernetes.

Step 2: Configure the KeyStore Password

Follow the recipe to configure the KeyStore password.

Step 3: Create the Striim app on Striim server deployed using Kubernetes

Use the app wizard in the UI to create a Striim app as shown in the recipe.

Deploy and run real-time data streaming app

Wrapping Up: Start Your Free Trial

This tutorial showed how to deploy and run a Striim app on Google Kubernetes Engine, which is built on Kubernetes, a widely used container orchestration tool. Now you can integrate Striim with scalable applications managed within Kubernetes clusters. With Striim’s integrations with major databases and data warehouses and its powerful CDC capabilities, data streaming and analytics become fast and efficient. As always, feel free to reach out to our integration experts to schedule a demo, or try Striim for free here.

Tools you need

Striim

Striim’s unified data integration and streaming platform connects clouds, data and applications.

PostgreSQL

PostgreSQL is an open-source relational database management system.

Kubernetes

Kubernetes is an open-source container orchestration tool for automatic deployment and scaling of containerized applications.

Google BigQuery

BigQuery is a serverless, highly scalable multicloud data warehouse.