Before stream processing became essential for businesses, batch processing was the standard. Today, batch processing can feel outdated—can you imagine having to book a ride-share hours in advance or playing online multiplayer games with significant delays? What about trading stocks based on prices that are minutes or hours old?

Fortunately, stream processing has transformed how we handle real-time data, eliminating these inefficiencies. To fully grasp why stream processing is crucial for modern businesses, it’s important to first understand batch processing. In this guide, we’ll explore the fundamentals of batch processing, compare batch processing vs stream processing, and provide a clear batch processing definition for your reference.

Batch Processing Definition: What is Batch Processing?

Batch processing involves collecting data over time and processing it in large, discrete chunks, or “batches.” This data is moved at scheduled intervals or once a specific amount has been gathered. In a batch processing system, data is accumulated, stored, and processed in bulk, typically during off-peak hours to reduce system impact and optimize resource usage.

Batch processing does still have various uses, including:

- Credit card transaction processing

- Maintaining an index of company files

- Processing electricity consumption data for monthly billing

“Batch will always have its place,” shares Benjamin Kennady, a Cloud Solutions Architect at Striim. “There are many situations and data sources where batch processing is the only technical option. This doesn’t negate the value that streaming can provide … but to say it’s outdated compared to streaming would be incorrect. Most organizations are going to require both.”

Batch processing, however, isn’t ideal for businesses that need to respond to real-time events, which limits its use cases. For immediate data handling, stream processing is the solution: it processes and transfers data as soon as it is collected, allowing businesses to act on current information without delay.

“There are many use cases where the current pipeline built using batch processing could be upgraded into a streaming process,” says Kennady. “Real time streaming unlocks potential use cases that aren’t available when using batch, but batch is relatively simpler to manage is one way to view the tradeoff.”

Batch Processing and Batch-Based Data Integration

When discussing batch processing, you’ll often hear the term batch-based data integration. While related, they differ slightly. Batch processing involves executing tasks on large volumes of data at scheduled intervals, such as generating reports or processing payroll. Batch-based data integration, however, specifically focuses on moving and consolidating data from various sources into a target system in batches. In short, batch-based data integration is a subset of batch processing, with its primary focus on unifying data across systems.

How Does Batch Processing Work?

Logistically speaking, here’s how batch processing works.

1. Data collection occurs.

Batch processing begins with the collection of data over time from several sources. This data is stored in a staging area, and may include transactional records, logs, sensor data, inventory data, and more.

2. Batches are created.

Collected data is assembled into a batch when a specific trigger fires, such as the end of a day’s transactions or reaching a predefined data volume.

3. Batch processing occurs.

Each batch is processed as a single unit. Processing involves data transformation tasks such as aggregations, calculations, and conversions, which are required to produce the final output.

4. Results are transferred and stored.

After processing, the results are typically stored in a database or data warehouse. The processed data may be used for reporting, analysis, or other business functions.

The most important thing to remember about this process is that it is performed only at scheduled intervals. Depending on your business requirements and data volume, you can determine if you’d like this to occur daily, weekly, monthly, or as necessary.
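The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product implements batching: the names `staging_area`, `BATCH_SIZE`, `make_batches`, `process_batch`, and the in-memory `warehouse` list are all hypothetical, and the trigger is a simple record-count threshold rather than a schedule.

```python
from collections import defaultdict

# Hypothetical trigger: a batch is formed for every BATCH_SIZE staged records.
BATCH_SIZE = 3


def make_batches(staging_area, batch_size=BATCH_SIZE):
    """Step 2: split staged records into batches (the last one may be smaller)."""
    return [
        staging_area[i:i + batch_size]
        for i in range(0, len(staging_area), batch_size)
    ]


def process_batch(batch):
    """Step 3: process the batch as one unit; here, total amount per account."""
    totals = defaultdict(float)
    for record in batch:
        totals[record["account"]] += record["amount"]
    return dict(totals)


# Step 1: records collected over time into a staging area (sample data).
staging_area = [
    {"account": "A", "amount": 10.0},
    {"account": "B", "amount": 5.0},
    {"account": "A", "amount": 2.5},
    {"account": "B", "amount": 1.0},
]

# Step 4: results are stored; a list stands in for a database or data warehouse.
warehouse = [process_batch(batch) for batch in make_batches(staging_area)]
print(warehouse)
```

In a real deployment the loop at the bottom would be started by a scheduler (daily, weekly, monthly) rather than run inline.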

Let’s dive deeper and compare batch processing vs stream processing to get a clearer understanding of key differences.

Batch Processing vs Stream Processing: What’s the Difference?

While batch processing and stream processing aim to achieve the same result—data processing and analysis—the way they go about doing so differs tremendously.

Batch processing:

- Processes data in bulk: Data is collected over time and processed in large, discrete batches, often at scheduled intervals (e.g., hourly, daily, or weekly).

- Latency is higher: Since data is processed in batches, there is an inherent delay between when data is collected and when it is analyzed or acted upon. This makes it suitable for tasks where real-time response isn’t critical.

- Inefficient for real-time needs: While batch processing can handle large volumes of data, it delays action by processing data in bulk at scheduled times, making it unsuitable for businesses that need real-time insights. This lag can lead to outdated information and missed opportunities.

Batch processing isn’t inherently bad; it’s effective for tasks like large-scale data aggregation or historical reporting where real-time updates aren’t critical. However, stream processing is a better fit in certain scenarios. For example, technologies like Change Data Capture (CDC) capture real-time data changes, while stream processing immediately processes and analyzes those changes. This makes stream processing ideal for use cases such as operational analytics and customer-facing applications, where stale data can lead to missed insights or a poor user experience.
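The latency gap can be made concrete with a little arithmetic. The sketch below assumes a batch job that runs at fixed intervals and a stream processor with a small, constant per-event delay; the function names and the sample numbers are illustrative assumptions, not measurements.

```python
import math


def batch_latencies(arrival_minutes, interval_minutes):
    """Minutes each record waits until the next scheduled batch run."""
    return [
        math.ceil(t / interval_minutes) * interval_minutes - t
        for t in arrival_minutes
    ]


def stream_latencies(arrival_minutes, per_event_delay_minutes):
    """Each record is handled on arrival, so it waits only the processing delay."""
    return [per_event_delay_minutes for _ in arrival_minutes]


arrivals = [5, 20, 59]  # minutes past the hour (sample data)
batch = batch_latencies(arrivals, interval_minutes=60)
stream = stream_latencies(arrivals, per_event_delay_minutes=0.01)

print(batch)   # [55, 40, 1]
print(stream)  # the same assumed delay for every record
```

With an hourly batch, a record that arrives five minutes past the hour waits 55 minutes before it is processed, while its streaming latency depends only on the per-event processing delay.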

Stream processing:

- Processes data in real-time: Stream processing continuously processes data as it’s collected, enabling immediate analysis and action. This capability is crucial for businesses that rely on up-to-the-minute insights to stay competitive, such as in fraud detection, stock trading, or personalized customer interactions.

- Low latency: Stream processing delivers results with minimal delay, providing businesses with real-time information to make timely and informed decisions. “Real time streaming and processing of data is most crucial for dynamic environments where low-latency data handling is required,” says Kennady. “This is vital for dynamic datasets that are continuously changing. Anywhere you have databases or datasets changing and you need a low latency replication solution is where you should consider a data streaming solution like Striim.” This speed is essential for applications where every second counts, ensuring rapid responses to critical events.

- Maximized system performance: While stream processing requires continuous system operation, this investment ensures that data is always up-to-date, empowering real-time decision-making and giving businesses a competitive edge in fast-paced industries. The always-on nature of stream processing ensures no opportunity is missed.

That being said, modern data streaming platforms, such as Striim, can still support batch processing should you choose to use it. “Batch still has its role in the modern world and Striim fully supports it via its initial load capabilities,” says Dmitriy Rudakov, Director of Solution Architecture at Striim.

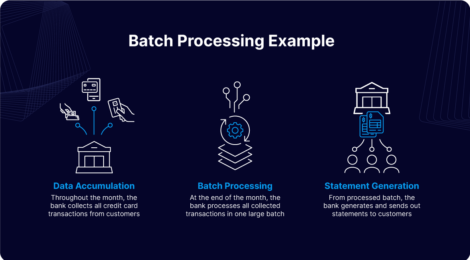

Batch Processing Example

Let’s walk through a batch processing example, using a bank. In a traditional banking setup, batch processing is often used to generate monthly credit card statements. It usually works like this:

- Data Accumulation: Throughout the month, the bank collects all credit card transactions from customers. These transactions include purchases, payments, and fees, which are stored in a staging area.

- Batch Processing: At the end of the month, the bank processes all collected transactions in one large batch. This involves calculating totals, applying interest rates, and preparing the statements for each customer.

- Statement Generation: After processing the batch, the bank generates and sends out the statements to customers.

Batch processing is well-suited for tasks like statement generation, where the process only needs to occur periodically, such as once a month. In this case, there’s no need for real-time updates, and the focus is on processing large volumes of data at scheduled intervals.
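As a rough sketch of that month-end job, the Python below totals each customer's accumulated transactions and applies interest in a single pass. The interest rate, the field names, and the rule that interest applies only to positive balances are assumptions made for illustration, not a description of any bank's actual process.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical monthly interest rate applied to any outstanding balance.
MONTHLY_INTEREST_RATE = Decimal("0.02")
CENT = Decimal("0.01")


def generate_statements(transactions, rate=MONTHLY_INTEREST_RATE):
    """Process a month of staged transactions in one batch."""
    balances = defaultdict(Decimal)
    for tx in transactions:
        # Purchases and fees are positive amounts; payments are negative.
        balances[tx["customer"]] += tx["amount"]

    statements = {}
    for customer, balance in balances.items():
        interest = (balance * rate).quantize(CENT) if balance > 0 else Decimal("0.00")
        statements[customer] = {
            "balance": balance,
            "interest": interest,
            "total_due": balance + interest,
        }
    return statements


# Transactions accumulated in the staging area during the month (sample data).
month_of_transactions = [
    {"customer": "alice", "amount": Decimal("100.00")},  # purchase
    {"customer": "alice", "amount": Decimal("-40.00")},  # payment
    {"customer": "alice", "amount": Decimal("5.00")},    # fee
    {"customer": "bob", "amount": Decimal("20.00")},     # purchase
    {"customer": "bob", "amount": Decimal("-20.00")},    # payment
]

statements = generate_statements(month_of_transactions)
for customer, statement in statements.items():
    print(customer, statement["total_due"])
```

`Decimal` is used instead of `float` so that currency amounts are not affected by binary rounding.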

If we tried to use the same batch processing pipeline for a more operational use case like fraud detection, we’d face several challenges, including:

- Delayed Insights: Because transactions are processed in bulk at the end of the month, any discrepancies or issues, such as fraudulent charges, are only identified after the batch processing is complete. This delay means that customers or the bank may not detect and address issues until after they’ve had a significant impact.

- Missed Opportunities for Immediate Action: If a customer reports a suspicious transaction shortly after it occurs, the bank might not be able to take immediate action due to the delay inherent in batch processing. Real-time fraud detection and response are not possible, potentially allowing fraudulent activity to continue for weeks.

- Customer Dissatisfaction: Customers who experience issues with their transactions or statements must wait until the end of the month for resolution, leading to potential dissatisfaction and erosion of trust.

However, by leveraging stream processing instead, the bank gains the ability to analyze transactions as they occur, enabling real-time fraud detection, immediate customer notifications, and quicker resolution of issues. “In any use case where latency or speed is important, data engineers want to use streaming instead of batch processing,” shares Dmitriy Rudakov. “For example if you have a bank withdrawal and simultaneously there’s an audit check or some other need to see an accurate account balance.”

This approach ensures that both the bank and its customers can respond to and manage transactions in real time, avoiding the delays and missed opportunities associated with batch processing. This batch processing example shows why stream processing is imperative for modern businesses.

Stream Processing and Real-Time Data Integration

Often when discussing stream processing, real-time data integration is also a key topic—similar to how batch processing and batch-based data integration go hand-in-hand. These two concepts are closely related and work together to provide immediate insights and ensure synchronized data across systems.

Stream processing involves the continuous analysis of data as it flows in, allowing businesses to respond to events and trends in real time. It handles data streams instantaneously to deliver up-to-the-minute information and actions. Stream processing platforms are essential for businesses aiming to harness real-time data effectively. According to Dmitriy Rudakov, “Striim supports real-time streaming from all popular data sources such as files, messaging, and databases. It also provides an SQL like language that allows you to enhance your streaming pipelines with any transformations.”

Real-time data integration, on the other hand, ensures that the processed data is accurately and consistently updated across various systems and platforms. By integrating data in real-time, organizations synchronize their databases, applications, and data warehouses, ensuring that all components operate with the most current information. Together, stream processing and real-time data integration offer a unified approach to dynamic data management, significantly enhancing operational efficiency and decision-making capabilities.

Four Reasons You Need Real-Time Data Integration

Now that you understand why batch processing falls short for modern businesses seeking to gain real-time insights, respond swiftly to critical events, and optimize operational efficiency, it’s clear that adopting stream processing is essential for meeting these needs effectively. Here are four reasons real-time data integration is a must-have.

It enables quick, informed decision-making.

According to Statista, in July 2024, 67% of the global population were internet users, each producing ever-larger amounts of data. Real-time integration enables businesses to act on this information quickly.

Data from on-premises and cloud-based sources can easily be fed, in real time, into cloud-based analytics built on, for instance, Kafka (or comparable cloud messaging services such as Google Pub/Sub, Amazon Kinesis, and Azure Event Hubs), Snowflake, or BigQuery, providing timely insights and allowing fast decision-making.

The importance of speed can’t be overstated. Detecting and blocking fraudulent credit card usage requires matching payment details with a set of predefined parameters in real time. If, in this case, data processing took hours or even minutes, fraudsters could get away with stolen funds. But real-time data integration allows banks to collect and analyze information rapidly and cancel suspicious transactions.
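A minimal sketch of that kind of per-transaction check is shown below. Each payment is evaluated the moment it arrives, rather than waiting for a batch. The rule parameters (`MAX_AMOUNT`, `HOME_COUNTRY`) and the `Payment` fields are hypothetical; production fraud systems use far richer signals.

```python
from dataclasses import dataclass


@dataclass
class Payment:
    card_id: str
    amount: float
    country: str


# Hypothetical predefined parameters for the check.
MAX_AMOUNT = 1000.0
HOME_COUNTRY = {"card-1": "US", "card-2": "DE"}


def is_suspicious(payment):
    """Flag payments over the limit or made outside the card's home country."""
    over_limit = payment.amount > MAX_AMOUNT
    wrong_country = HOME_COUNTRY.get(payment.card_id) != payment.country
    return over_limit or wrong_country


def screen(payments):
    """Evaluate each payment as it arrives and yield a decision immediately."""
    for payment in payments:
        yield payment, ("BLOCK" if is_suspicious(payment) else "ALLOW")


# A list stands in for a live stream of incoming payments (sample data).
incoming = [
    Payment("card-1", 25.0, "US"),
    Payment("card-1", 5000.0, "US"),
    Payment("card-2", 40.0, "BR"),
]

decisions = [decision for _, decision in screen(incoming)]
print(decisions)  # ['ALLOW', 'BLOCK', 'BLOCK']
```

Because `screen` is a generator, a decision is produced for each payment before the next one is read, which is the property that lets a suspicious transaction be cancelled before it completes.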

Companies that ship their products also need to make decisions quickly. They require up-to-date information on inventory levels so that customers don’t order out-of-stock products. Real-time data integration prevents this problem because all departments have access to continuously updated information, and customers are notified about sold-out goods.

Cumulatively, the result is enhanced operational efficiency. By ensuring timely and accurate data, businesses can not only respond to immediate issues but also optimize their operations for improved service delivery and strategic decision-making.

It breaks down data silos.

When dealing with data silos, real-time data integration is crucial. It connects data from disparate sources—such as Enterprise Resource Planning (ERP) software, Customer Relationship Management (CRM) software, Internet of Things (IoT) sensors, and log files—into a unified system with sub-second latency. This consolidation eliminates isolation, providing a comprehensive view of operations.

For example, in hospitals, real-time data integration links radiology units with other departments, ensuring that patient imaging data is instantly accessible to all relevant stakeholders. This improves visibility, enhances decision-making, and optimizes operational efficiency by breaking down data silos and delivering timely, accurate information.

It improves customer experience.

The best way to give customer experience a boost is by leveraging real-time data integration.

Your support reps can better serve customers by having data from various sources readily available. Agents with real-time access to purchase history, inventory levels, or account balances will delight customers with an up-to-the-minute understanding of their problems. Rapid data flows also allow companies to be creative with customer engagement. They can program their order management system to inform a CRM system to immediately engage customers who purchased products or services.

Better customer experiences translate into increased revenue, profits, and brand loyalty. Almost 75% of consumers say a good experience is critical for brand loyalty, while most businesses consider customer experience a competitive differentiator vital for their survival and growth.

It boosts productivity.

Spotting inefficiencies and taking corrective actions is crucial for modern companies. Having access to real-time data and continuously updated dashboards is essential for this purpose. Relying on periodically refreshed data can slow progress, causing delays in problem identification and leading to unnecessary costs and increased waste.

Optimizing business productivity hinges on the ability to collect, transfer, and analyze data in real time. Many companies recognize this need. According to an IBM study, 44% of businesses expect rapid data access to lead to better-informed decisions.

Real-Time Data Integration Requires New Technology: Try Striim

Real-time data integration involves processing and transferring data as soon as it’s collected, utilizing advanced technologies such as Change Data Capture (CDC) and in-flight transformations. Luckily, Striim can help. Striim’s CDC tracks changes in a database’s logs, converting inserts, updates, and other events into a continuous data stream that updates a target database. This ensures that the most current data is always available for analysis and action. Transform-in-flight is another key Striim feature that enables data to be formatted and enriched as it moves through the system. This capability ensures that data is delivered in a ready-to-use format, incorporating inputs from various sources and preparing it for immediate processing.
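To make the idea concrete, here is a generic Python sketch of change events being transformed in flight and applied to a target. It does not use Striim's API or reflect its internals: the event shape (`op`, `key`, `row`), the `transform_in_flight` enrichment, and the dictionary standing in for the target database are all assumptions chosen for illustration.

```python
def transform_in_flight(event):
    """Hypothetical in-flight step: normalize email addresses to lowercase."""
    row = event.get("row")
    if row is not None and "email" in row:
        row = {**row, "email": row["email"].lower()}
    return {**event, "row": row}


def apply_change(target, event):
    """Apply one change event to the target, keyed by primary key."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        # Merge changed columns into the existing row (or create it).
        target[key] = {**target.get(key, {}), **event["row"]}
    elif op == "delete":
        target.pop(key, None)


# Change events as they might be read from a source database's log (sample data).
change_log = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "email": "ADA@Example.com"}},
    {"op": "update", "key": 1, "row": {"name": "Ada L."}},
    {"op": "insert", "key": 2, "row": {"name": "Bob", "email": "bob@example.com"}},
    {"op": "delete", "key": 2, "row": None},
]

target = {}  # stands in for the target database
for event in change_log:
    apply_change(target, transform_in_flight(event))

print(target)  # {1: {'name': 'Ada L.', 'email': 'ada@example.com'}}
```

Each event is applied as soon as it is read, so the target tracks the source continuously instead of being refreshed by a periodic bulk load.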

Striim leverages these technologies to provide seamless real-time data integration. By capturing data changes and transforming data in-flight, Striim delivers accurate, up-to-date information that supports efficient decision-making and operational excellence. Ready to ditch batch processing and experience the difference of stream processing and real-time data integration? Book a demo today and see for yourself how Striim can fuel better decision-making, enhanced customer experience, and beyond.