In today’s fast-paced world, staying ahead of the competition requires making decisions quickly, informed by the freshest data available. That’s where real-time data integration comes into play. By continuously blending and updating information from numerous sources, businesses can keep their AI systems supplied with current, accurate data.

What is Real-Time Data Integration and Why is it Important?

Real-time data integration is the continuous, low-latency collection, transformation, and distribution of data across systems and applications. Here’s how real-time data integration is made possible:

- Data Ingestion: The process begins with the ingestion of data from various sources, including Internet of Things (IoT) devices, databases, and applications.

- Change Data Capture (CDC): Products such as Striim monitor and capture database changes from transaction logs in real time, recording updates, inserts, and deletes as they occur.

- Data Transformation: This step involves filtering, aggregating, enriching, and other processes to prepare data for business use.

- Event-driven Architecture: Leveraging event-driven architecture allows businesses to utilize streaming to publish and subscribe to events in real time, enabling rapid insights and responses.
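
As a rough illustration of how the four pieces above fit together, the following Python sketch pushes a handful of change events through a filter-and-enrich step and publishes them to subscribers. It is not Striim's API: the event shape, `EventBus`, `transform`, and `run_pipeline` are hypothetical names, and an in-memory list stands in for a transaction log reader.

```python
from collections import defaultdict
from typing import Callable, Iterable, Iterator

# Hypothetical change events, shaped like what a CDC reader might emit.
CHANGES = [
    {"op": "insert", "table": "orders", "row": {"id": 1, "amount": 120.0}},
    {"op": "update", "table": "orders", "row": {"id": 1, "amount": 150.0}},
    {"op": "insert", "table": "audit_log", "row": {"id": 9, "amount": 0.0}},
    {"op": "delete", "table": "orders", "row": {"id": 1, "amount": 150.0}},
]


class EventBus:
    """Minimal in-memory publish/subscribe hub (a stand-in for a message broker)."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(event)


def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Keep only changes to the orders table and enrich each with a size label."""
    for event in events:
        if event["table"] != "orders":
            continue
        label = "large" if event["row"]["amount"] >= 130 else "small"
        yield {**event, "size": label}


def run_pipeline(source: Iterable[dict], bus: EventBus) -> None:
    """Ingest -> transform -> publish, one event at a time."""
    for event in transform(source):
        bus.publish(f"orders.{event['op']}", event)


bus = EventBus()
received = []
for op in ("insert", "update", "delete"):
    bus.subscribe(f"orders.{op}", received.append)

run_pipeline(CHANGES, bus)
for event in received:
    print(event["op"], event["row"]["id"], event["size"])
```

Each event is handled as soon as it arrives rather than being collected into a batch, which is the property that distinguishes this flow from a scheduled job. A production system would replace the list and the in-memory bus with a log reader and a durable broker.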

Why is Real-Time Data Integration Important?

Real-time data integration is crucial because it ensures decisions are based on the most current information. Traditional batch processing, with its scheduled updates, is too slow for today’s rapidly changing digital landscape. Real-time data processing fills this gap, enabling businesses to gain a competitive edge by making timely and informed decisions.

Timely information can significantly affect business outcomes, so real-time data processing and integration is not a nice-to-have: business success can hinge on how well you use it. Prioritizing real-time data integration also gives businesses an advantage over competitors that still rely on batch processing alone.

What are 5 Real-Time Data Integration Strategies for AI?

Real-time data integration enables businesses to leverage AI to its fullest capacity, making thoughtful decisions based on timely, accurate data. Here are five key strategies.

Stream Processing

Stream processing is a critical component in today’s data-driven landscape, facilitating the continuous ingestion, transformation, and analysis of data streams from diverse sources in real time. Tools like Striim empower organizations to seamlessly collect, refine, and interpret data streams, enabling informed decision-making and fueling the capabilities of artificial intelligence systems.

In AI, stream processing matters because the effectiveness of a model depends on the quality and timeliness of its input data. That makes real-time processing essential for organizations that want to use AI effectively.

Use Cases for Stream Processing

Though stream processing has many compelling applications, two stand out: fraud detection and real-time analytics. Stream processing is well suited to fraud detection because it monitors transactions as they occur, allowing financial institutions to identify anomalies quickly and respond proactively to fraudulent activity.

Similarly, in the domain of real-time analytics, stream processing plays a pivotal role in ensuring the continuous analysis of data streams, yielding fresh insights and facilitating prompt decision-making. These up-to-the-minute insights are invaluable assets for organizations navigating dynamic market landscapes and seeking a competitive edge.
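
To make the fraud detection case concrete, here is a minimal Python sketch of per-account monitoring over a sliding window. It is only one simple heuristic, not a description of how any particular product detects fraud: the `SpendingMonitor` class, the window size, and the 3x threshold are all assumptions chosen for illustration.

```python
from collections import defaultdict, deque
from statistics import mean


class SpendingMonitor:
    """Flags a transaction that is far above the account's recent average.

    Hypothetical heuristic: a transaction is suspicious when it exceeds
    `factor` times the mean of the last `window` amounts for that account.
    """

    def __init__(self, window: int = 5, factor: float = 3.0, min_history: int = 3):
        self.factor = factor
        self.min_history = min_history
        self._history = defaultdict(lambda: deque(maxlen=window))

    def observe(self, account: str, amount: float) -> bool:
        history = self._history[account]
        # Only judge once there is enough history to form a baseline.
        suspicious = (
            len(history) >= self.min_history
            and amount > self.factor * mean(history)
        )
        history.append(amount)
        return suspicious


# Hypothetical transaction stream of (account, amount) pairs.
stream = [
    ("a", 20.0), ("a", 25.0), ("a", 22.0),
    ("a", 400.0),   # far above account a's recent average
    ("b", 500.0),   # first transaction for account b, no baseline yet
    ("a", 24.0),
]

monitor = SpendingMonitor()
flagged = [(acct, amt) for acct, amt in stream if monitor.observe(acct, amt)]
print(flagged)
```

Because the decision is made inside `observe`, each transaction is scored the moment it arrives. Note that this sketch also adds flagged amounts to the history, which raises the baseline afterward; a real system would likely treat confirmed fraud differently.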

Extract, Transform, Load in Real Time

Extract, Transform, Load, or ETL, is a vital process in data management. It involves extracting data from various sources, transforming it into a suitable format, and loading it into a data warehouse or comparable storage system. Traditionally, ETL processes were batch-oriented and ran at scheduled intervals, so stored data could be out of date between runs. With real-time ETL, data is processed continuously as it is generated, so storage systems contain current data.
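
The difference from batch ETL can be sketched in a few lines of Python. In this illustrative example, each record is transformed and loaded the moment it is produced, and an in-memory SQLite table stands in for the warehouse. The `payments` table, the record fields, and the function names are assumptions made for the sketch.

```python
import sqlite3


def extract():
    """Yield raw records one at a time, as a live source would."""
    yield {"id": 1, "customer": " alice ", "amount_cents": 1250}
    yield {"id": 2, "customer": "Bob", "amount_cents": 990}
    yield {"id": 1, "customer": " alice ", "amount_cents": 1500}  # later update to id 1


def transform(record: dict) -> tuple:
    """Clean the customer name and convert cents to currency units."""
    name = record["customer"].strip().title()
    return (record["id"], name, record["amount_cents"] / 100)


def load(conn: sqlite3.Connection, row: tuple) -> None:
    """Upsert a single row so the table always reflects the latest state."""
    conn.execute(
        "INSERT OR REPLACE INTO payments (id, customer, amount) VALUES (?, ?, ?)",
        row,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE payments (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)

# Each record is transformed and loaded as soon as it arrives,
# instead of waiting for a scheduled batch window.
for record in extract():
    load(conn, transform(record))

rows = conn.execute("SELECT id, customer, amount FROM payments ORDER BY id").fetchall()
print(rows)
```

Committing per record keeps the target current at the cost of more write overhead; real pipelines often group records into small micro-batches to balance the two.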

Real-time ETL is crucial for successful AI systems because working with the latest data ensures accuracy and relevance. Without it, AI results may be outdated and inaccurate, hindering decision-making processes.

Use Cases for ETL in Real Time

Real-time ETL finds optimal application in data lakes or warehouses, ensuring organizations have access to continuously updated data from diverse sources. This empowers them with the resources needed for business intelligence, reporting, and decision-making.

For instance, in the financial services industry, real-time ETL enables banks to update data lakes with transactional data instantaneously. This facilitates real-time fraud detection and risk analysis, enhancing security and decision-making processes.

Data Visualization

Real-time data visualization involves sophisticated techniques and tools that cater to the advanced needs of data engineers. By leveraging platforms like Striim, data engineers can create dynamic dashboards that reflect live data insights, facilitating immediate decision-making.

In machine learning model monitoring, dashboards provide a way to evaluate and visualize model performance in real time. This allows data engineers to quickly detect and address model drift and anomalies. Specialized tools also visualize machine learning performance metrics, such as feature distributions and prediction quality, to support robust model monitoring.

Complex Event Processing (CEP) is another key area where advanced data visualization techniques are applied. Solutions that combine real-time event processing with advanced visualization capabilities offer robust frameworks for identifying patterns in streaming data. This approach is ideal for handling high-velocity data streams and supports immediate analysis and response.

Use Cases for Data Visualization

Your team can use Striim to build operational dashboards that display key performance indicators (KPIs) and operational metrics in real time. Incorporate drill-down capabilities and real-time alerts to continuously monitor system health, performance bottlenecks, and operational efficiency metrics.

Moreover, you can facilitate root cause analysis with data visualization. Use anomaly detection algorithms integrated with visualizations that highlight deviations from expected patterns, enabling data experts to investigate and mitigate issues promptly.
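
One common way to highlight deviations from expected patterns is a rolling z-score, which a dashboard could use to mark points for investigation. The Python sketch below is a generic illustration under assumed parameters (a 10-point window and a threshold of 3 standard deviations); the function name and the sample latency values are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


def zscore_alerts(values, window: int = 10, threshold: float = 3.0):
    """Yield (index, value) for points far from the mean of the preceding window."""
    recent = deque(maxlen=window)
    for index, value in enumerate(values):
        if len(recent) == window:
            mu = mean(recent)
            sigma = pstdev(recent)
            # Skip perfectly flat windows to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield index, value
        recent.append(value)


# Hypothetical response-time samples in milliseconds, with one spike.
latency_ms = [100, 102, 98, 101, 99, 100, 103, 97, 100, 100, 250, 101]

alerts = list(zscore_alerts(latency_ms))
print(alerts)
```

The generator yields alerts as values stream in, so the same function could feed a live chart annotation or an alerting rule. The window and threshold would need tuning against real metrics.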

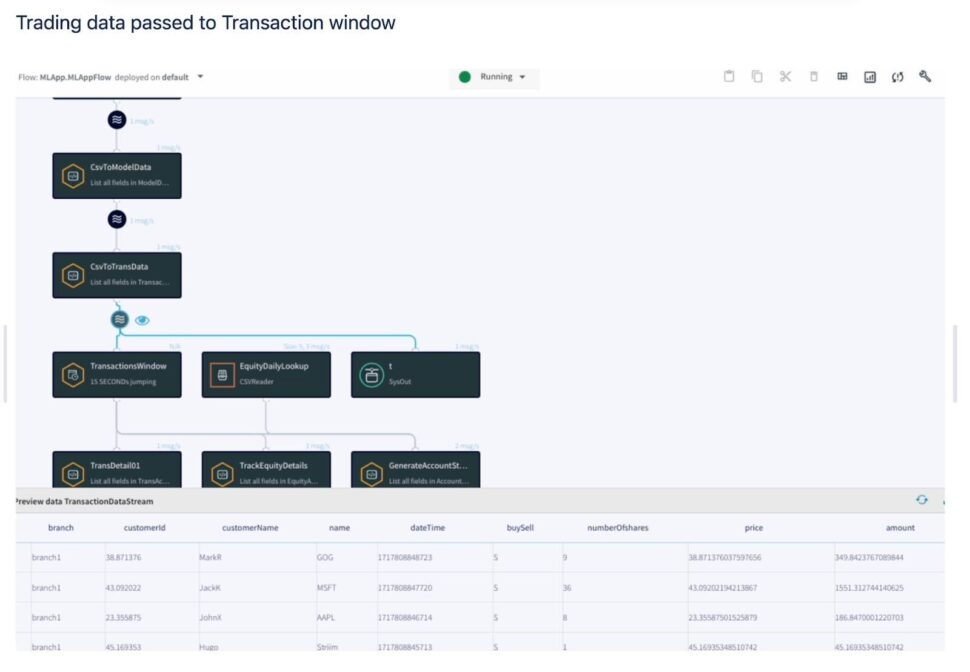

This Striim application tracks trader activity and detects fraud using anti-money laundering (AML) rules and anomaly detection.

IoT Data Integration

IoT data integration involves the collection, consolidation, and analysis of data generated by IoT devices, ranging from smart gadgets to sensors, which produce vast amounts of real-time data.

This integration is indispensable for successful AI systems because IoT devices continuously provide data streams in real time. By incorporating this data into AI systems, teams ensure that their AI models have access to the latest information, crucial for decision-making applications. Additionally, IoT data integration enhances predictive capabilities, allowing AI systems to make more accurate predictions due to the extensive data available from IoT devices.

Use Cases for IoT Data Integration

The applications of IoT data integration are vast. In the healthcare industry, for example, integrating IoT data into AI enables remote patient monitoring. Wearable health monitors collect patients’ vital signs, and AI systems analyze this data to provide real-time insights into wellness, empowering healthcare providers to take proactive measures.

Another significant use case is in industrial settings. Utilizing IoT devices such as sensors in manufacturing allows organizations to monitor machinery and equipment. AI systems analyze data from these sensors to predict maintenance schedules, reducing downtime and preventing costly breakdowns.
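
As a simplified illustration of the maintenance prediction idea, the Python sketch below fits a straight line to recent sensor readings and estimates how long until the trend crosses a limit. Real predictive maintenance models are usually far richer; the linear trend, the `hours_until_threshold` function, and the vibration values here are assumptions for the example.

```python
from typing import Optional


def hours_until_threshold(readings, threshold: float) -> Optional[float]:
    """Estimate hours from the last reading until a rising trend hits `threshold`.

    `readings` is a list of (hour, value) pairs. Returns None when there are
    too few points or the fitted trend is not rising.
    """
    n = len(readings)
    if n < 2:
        return None
    mean_t = sum(t for t, _ in readings) / n
    mean_v = sum(v for _, v in readings) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in readings)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in readings)
    if sxx == 0:
        return None
    slope = sxy / sxx  # least-squares slope
    if slope <= 0:
        return None
    intercept = mean_v - slope * mean_t
    crossing_hour = (threshold - intercept) / slope
    last_hour = readings[-1][0]
    return max(0.0, crossing_hour - last_hour)


# Hypothetical vibration readings (hour, mm/s) from one machine.
vibration = [(0, 2.0), (1, 2.5), (2, 3.0), (3, 3.5)]

remaining = hours_until_threshold(vibration, threshold=5.0)
print(remaining)
```

Re-running the estimate each time a new reading arrives is what ties this to real-time integration: the forecast always reflects the latest sensor data rather than the last batch load.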

API Integrations

In contemporary data integration strategies, API integrations play a crucial role. Application Programming Interfaces (APIs) enable disparate software systems to communicate effectively, making them invaluable for real-time data integration.

APIs provide a standardized method for accessing data from various sources, including databases, cloud applications, and more. This accessibility is essential for AI systems, which require vast amounts of data to train models and make accurate predictions. APIs facilitate the ingestion of real-time data from multiple sources, so that AI models can work from continuously updated data.

Use Cases for API Integrations

API integrations offer numerous benefits for AI utilization. In a retail setting, for instance, real-time inventory management can be enhanced through API connections. Retail chains leveraging AI for decision-making and operational efficiency can use APIs to connect point of sale systems, inventory management systems, and e-commerce platforms. When a sale occurs, the API updates central inventory instantaneously, providing real-time sales and inventory data to AI systems for informed decision-making.
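
The inventory flow described above might look something like the following Python sketch, in which a handler applies sale events to a central stock record. The event fields (`sale_id`, `sku`, `quantity`) and the `Inventory` class are hypothetical, and the duplicate check is an added assumption, since API callers commonly retry requests.

```python
from dataclasses import dataclass, field


@dataclass
class Inventory:
    """Central stock record updated by sale events from point of sale systems."""

    stock: dict
    seen_sales: set = field(default_factory=set)

    def apply_sale(self, event: dict) -> bool:
        """Apply a sale event once. Returns False for a repeated sale_id."""
        if event["sale_id"] in self.seen_sales:
            return False  # duplicate delivery, already applied
        sku, quantity = event["sku"], event["quantity"]
        if self.stock.get(sku, 0) < quantity:
            raise ValueError(f"insufficient stock for {sku}")
        self.stock[sku] -= quantity
        self.seen_sales.add(event["sale_id"])
        return True


inventory = Inventory(stock={"sku-1": 10})

# Hypothetical events as they might arrive from an API endpoint.
events = [
    {"sale_id": "s1", "sku": "sku-1", "quantity": 3},
    {"sale_id": "s1", "sku": "sku-1", "quantity": 3},  # retried request
    {"sale_id": "s2", "sku": "sku-1", "quantity": 2},
]

results = [inventory.apply_sale(e) for e in events]
print(results, inventory.stock)
```

In a deployed system, `apply_sale` would sit behind an HTTP endpoint or message consumer, and the updated stock levels would be forwarded to the AI system that consumes them.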

Another use case involves real-time data exchange and breaking down silos between systems. APIs facilitate interoperability between different systems, enabling seamless data exchange regardless of underlying technologies. This interoperability ensures that data is used effectively, contributing to thoroughly trained and up-to-date AI models.

Dive into Real-Time Data Integration and Streaming with Striim

For organizations looking to elevate their data integration capabilities and maximize the potential of AI systems, Striim offers comprehensive solutions. With Striim, you can harness real-time data from its inception, enabling meaningful insights and informed decision-making. Try Striim today with a free trial and experience the transformative power of real-time data integration.