For those who need to migrate an Oracle database to Google Cloud, the ability to move mission-critical data in real time between on-premises and cloud environments without database downtime or data loss is paramount. In this video, Alok Pareek, Founder and EVP of Products at Striim, demonstrates how the Striim platform enables Google Cloud users to build streaming data pipelines from their on-premises databases into their Cloud SQL environment with reliability, security, and scalability. The full 8-minute video is available to watch below:

Easy to Use

Striim offers an easy-to-use platform that maximizes the value gained from cloud initiatives, including cloud adoption, hybrid cloud data integration, and in-memory stream processing. This demonstration illustrates how Striim feeds real-time data from mission-critical applications, across a variety of on-premises and cloud-based sources, to Google Cloud without interrupting critical business operations.

Visualize Your Data

Through different interactive views, Striim users can develop Apps to build data pipelines to Google Cloud, create custom Dashboards to visualize their data, and Preview the Source data as it streams to ensure they’re getting the data they need. For this demonstration, Apps is the starting point from which to build the data pipeline.

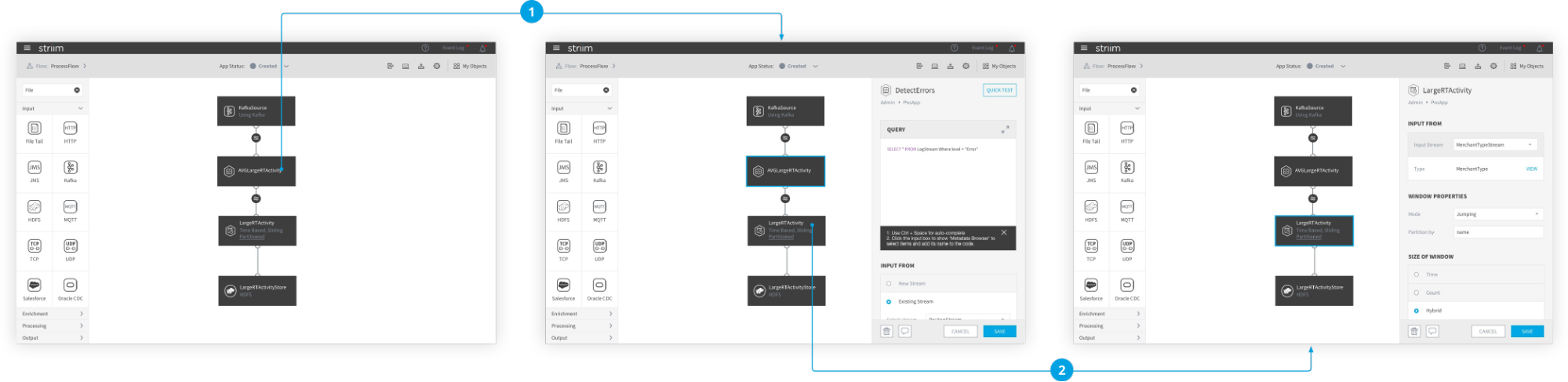

There are two critical phases in this zero-downtime data migration scenario. The first is the initial load of data from the on-premises Oracle database into the Cloud SQL Postgres database. The second is the synchronization phase, in which specialized readers keep the source and target consistent.
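The two phases can be pictured with a small in-memory model. This is only an illustrative sketch, not Striim code: the function names `initial_load` and `synchronize`, the tuple layout of a change, and the sample rows are all assumptions made for the example.

```python
# Hypothetical in-memory stand-ins for the source and target databases.
# None of these names come from Striim; they only illustrate the two phases.

def initial_load(source_rows):
    """Phase 1: copy every row present in the source snapshot."""
    return dict(source_rows)

def synchronize(target, changes):
    """Phase 2: replay changes captured while (and after) the load ran."""
    for op, key, row in changes:
        if op == "DELETE":
            target.pop(key, None)
        else:
            # INSERT and UPDATE both leave the target holding the new row.
            target[key] = row
    return target

snapshot = {1: {"status": "NEW"}, 2: {"status": "NEW"}}
changes_during_load = [
    ("UPDATE", 1, {"status": "SHIPPED"}),
    ("INSERT", 3, {"status": "NEW"}),
    ("DELETE", 2, None),
]

target = synchronize(initial_load(snapshot), changes_during_load)
print(target)
```

Because the changes are replayed after the bulk copy, the source can stay online for the whole migration, which is the point of the zero-downtime approach described above.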

The pipeline from the source to the target is built using a flow designer that makes it easy to create and modify streaming data pipelines. The data can also be transformed while in motion, to be realigned or delivered in a different format. Through the interface, the properties of the Oracle database can also be configured, giving users extensive flexibility in how the data is moved.

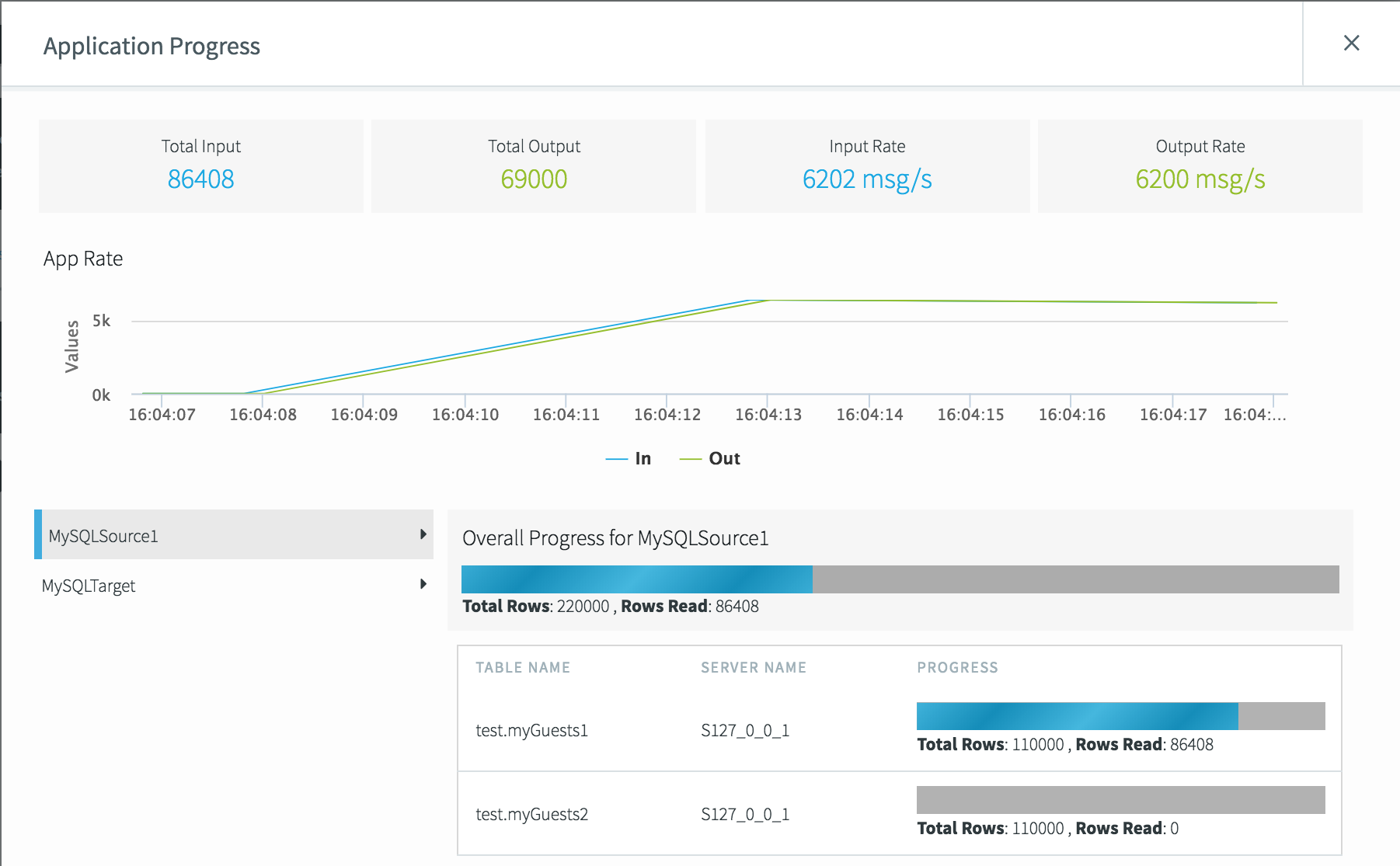

Once the application is started, the data can be previewed and progress monitored. While in motion, data can be filtered, transformed, aggregated, enriched, and analyzed before delivery. With up-to-the-second visibility of the data pipeline, users can quickly and easily verify the ingestion, processing, and delivery of their streaming data.
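As a rough illustration of what filtering, transforming, and enriching data in motion means, the sketch below chains Python generators so that each event is processed as it passes through. It does not use Striim's own pipeline language; the event fields, the `regions` lookup, and the function names are hypothetical.

```python
# A generic streaming pipeline built from generators. All field names
# (table, store, qty, unit_price_cents) are invented for this example.

def only_table(events, table):
    """Filter: keep events for a single table."""
    return (e for e in events if e["table"] == table)

def add_total(events):
    """Transform: derive an order total from quantity and unit price."""
    for e in events:
        yield {**e, "total_cents": e["qty"] * e["unit_price_cents"]}

def enrich(events, regions):
    """Enrich: attach a region looked up from reference data."""
    for e in events:
        yield {**e, "region": regions.get(e["store"], "UNKNOWN")}

events = [
    {"table": "orders", "store": "s1", "qty": 2, "unit_price_cents": 500},
    {"table": "audit", "store": "s1", "qty": 0, "unit_price_cents": 0},
    {"table": "orders", "store": "s9", "qty": 1, "unit_price_cents": 1250},
]
regions = {"s1": "WEST"}

delivered = list(enrich(add_total(only_table(events, "orders")), regions))
for row in delivered:
    print(row)
```

Each stage consumes one event at a time, so nothing has to be staged in full before delivery.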

During the initial load, the source data in the database is continually changing. Striim keeps the Cloud SQL Postgres database up to date with the on-premises Oracle database using change data capture (CDC). By reading the database transactions in the Oracle redo logs, Striim collects the insert, update, and delete operations as soon as the transactions commit, and applies only those changes to the target. This is done without impacting the performance of source systems and without any outage to the production database.
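The idea of collecting operations only once their transaction commits can be sketched as follows. This is a toy model under stated assumptions: the record layout is invented, it bears no relation to the actual Oracle redo log format, and it is not how Striim's reader is implemented.

```python
from collections import defaultdict

def committed_changes(log_records):
    """Yield row changes only after their transaction commits.

    Operations are buffered per transaction; a COMMIT releases them in
    order, and a ROLLBACK discards them so they never reach the target.
    """
    pending = defaultdict(list)
    for rec in log_records:
        txn, kind = rec["txn"], rec["kind"]
        if kind == "COMMIT":
            yield from pending.pop(txn, [])
        elif kind == "ROLLBACK":
            pending.pop(txn, None)
        else:
            pending[txn].append(rec)

# Hypothetical log: t1 and t3 commit, t2 rolls back.
log = [
    {"txn": "t1", "kind": "INSERT", "key": 10},
    {"txn": "t2", "kind": "UPDATE", "key": 7},
    {"txn": "t1", "kind": "COMMIT"},
    {"txn": "t2", "kind": "ROLLBACK"},
    {"txn": "t3", "kind": "DELETE", "key": 10},
    {"txn": "t3", "kind": "COMMIT"},
]

emitted = [(r["txn"], r["kind"], r["key"]) for r in committed_changes(log)]
print(emitted)
```

Only the committed insert and delete are emitted; the rolled-back update is dropped, which is why the target sees just the changes that actually took effect on the source.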

By generating DML activity using a simulator, the demonstration shows how inserts, updates, and deletes are managed. As DML operations run against the orders table, the preview shows not only the data being captured, but also metadata including the transaction ID, the system change number (SCN), the table name, and the operation type. Querying the orders table then confirms that the data is present.
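One way to picture a captured change together with its metadata is a small record type. The sketch below is an assumption for illustration only: the class `ChangeEvent`, its field names, and the sample values are invented and do not reflect Striim's actual event format.

```python
from dataclasses import dataclass

@dataclass
class ChangeEvent:
    """A captured row change plus the metadata shown in the preview."""
    txn_id: str      # transaction ID
    scn: int         # system change number
    table: str       # table name
    operation: str   # INSERT, UPDATE, or DELETE
    data: dict       # column values for the affected row

def preview_line(event):
    """Format one event as a single preview line."""
    return (
        f"{event.operation:<6} {event.table} "
        f"scn={event.scn} txn={event.txn_id} {event.data}"
    )

# Made-up values, used only to exercise the formatter.
event = ChangeEvent(
    txn_id="5.12.3041",
    scn=48213377,
    table="ORDERS",
    operation="UPDATE",
    data={"ORDER_ID": 1001, "STATUS": "SHIPPED"},
)
print(preview_line(event))
```

Keeping the metadata alongside the row data lets a reader of the preview tell which transaction and operation produced each change.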

The initial load of data from the source to the target, followed by change data capture to keep source and target in sync, allows businesses to move data from on-premises databases into Google Cloud with the peace of mind that there will be no data loss and no interruption of mission-critical applications.

Additional Resources

To learn more about Striim’s capabilities to support the data integration requirements for a Google hybrid cloud architecture, check out all of Striim’s solutions for Google Cloud Platform.

To read more about real-time data integration, please visit our Real-Time Data Integration solutions page.

To learn more about how Striim can help you migrate an Oracle database to Google Cloud, we invite you to schedule a demo with a Striim technologist.