Stream Data from PostgreSQL to Google BigQuery with Striim Cloud - Part 2

Use Striim Cloud to stream CDC data securely from PostgreSQL database into Google BigQuery

Benefits

Operational Analytics

Visualize real time data with Striim’s powerful Analytic Dashboard

Capture Data Updates in real time

Use Striim’s postgrescdc reader for real time data updates

Overview

In part 1 of PostgreSQL to Bigquery streaming, we have shown how data can be securely replicated between Postgres database and Bigquery. In this

recipe we will walk you through a Striim application capturing change data from postgres database and replicating to bigquery for real time visualization.

In addition to CDC connectors, Striim has hundreds of automated adapters for file-based data (logs, xml, csv), IoT data (OPCUA, MQTT), and applications such as Salesforce and SAP. Our SQL-based stream processing engine makes it easy to

enrich and normalize data before it’s written to Snowflake.

Traditionally Data warehouses that required data to be transferred use batch processing but with Striim’s streaming platform data can be replicated in real-time efficiently with added cost.

Data loses its value over time and businesses need to be updated with most recent data in order to make the right decisions that are vital to overall growth.

In this tutorial, we’ll walk you through how to create a replica slot to stream change data from postgres tables to bigquery and use the in-flght data to generate analytical dashboards.

Core Striim Components

PostgreSQL CDC: PostgreSQL Reader uses the wal2json plugin to read PostgreSQL change data. 1.x releases of wal2jon can not read transactions larger than 1 GB.

Stream: A stream passes one component’s output to one or more other components. For example, a simple flow that only writes to a file might have this sequence

Continuous Query : Striim Continuous queries are are continually running SQL queries that act on real-time data and may be used to filter, aggregate, join, enrich, and transform events.

Window: A window bounds real-time data by time, event count or both. A window is required for an application to aggregate or perform calculations on data, populate the dashboard, or send alerts when conditions deviate from normal parameters.

BigQueryWriter: Striim’s BigQueryWriter writes the data from various supported sources into Google’s BigQuery data warehouse to support real time data warehousing and reporting.

Step 1: Create a Replication Slot

For this recipe, we will host our app in Striim Cloud but there is always a free trial to visualize the power of Striim’s Change Data Capture.

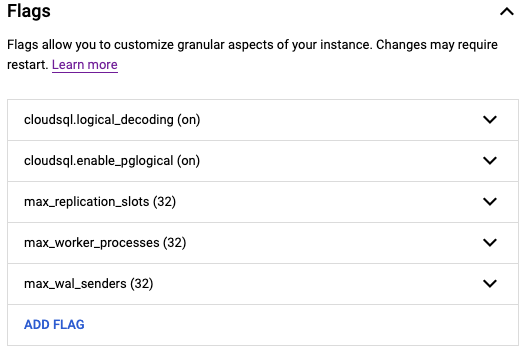

For CDC application on a postgres database, make sure the following flags are enabled for the postgres instance:

Create a user with replication attribute by running the following command on google cloud console:

CREATE USER replication_user WITH REPLICATION IN ROLE cloudsqlsuperuser LOGIN PASSWORD ‘yourpassword’;

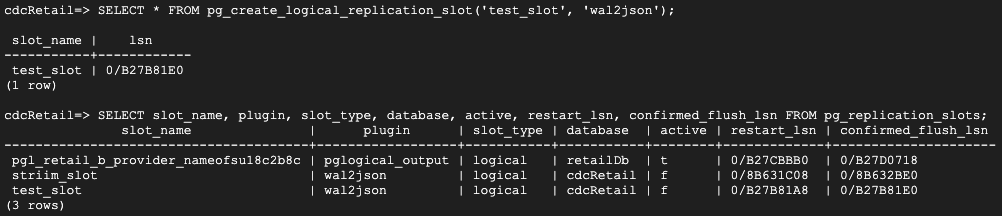

Follow the steps below to set up your replication slot for change data capture:

Create a logical slot with wal2json plugin.

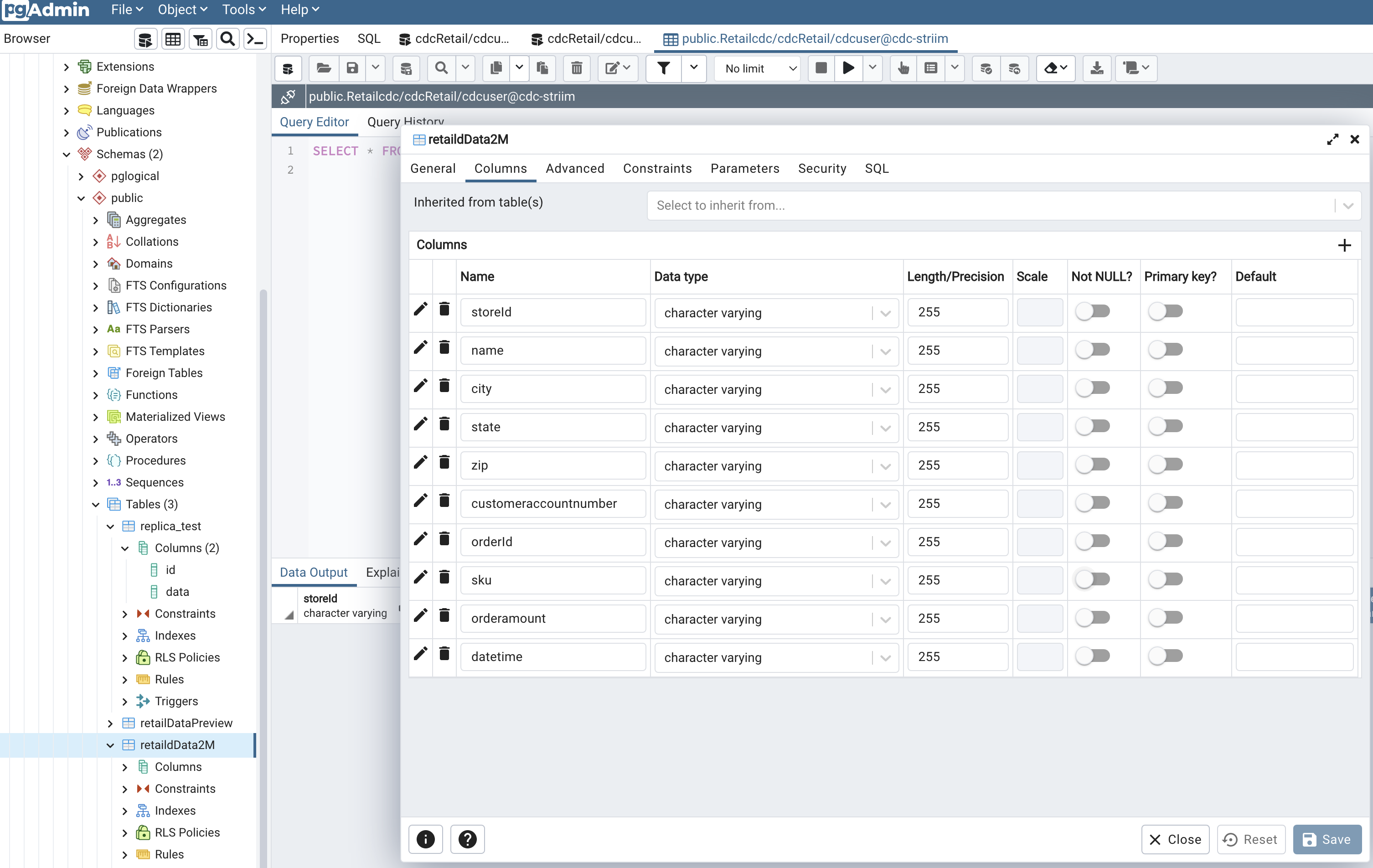

Create table that needs to be replicated for capturing changes in data. I have used PgAdmin, which is a UI for postgres database management system to create my table and insert data into it.

Step 2: Configure CDC app on Striim Server

Follow the steps described in part 1 for creating an app from scratch. You can find the TQL file for this app in our git repository.

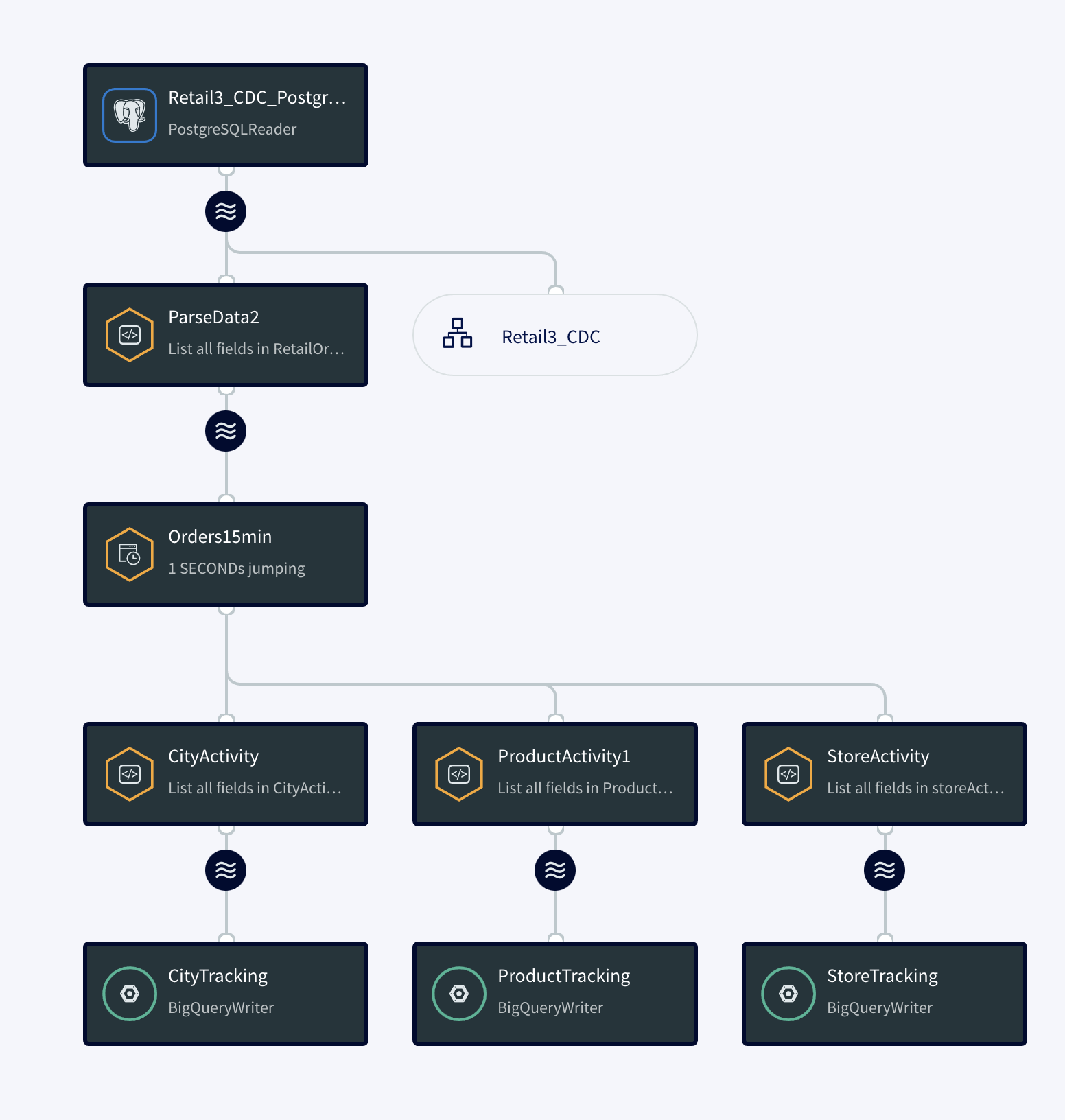

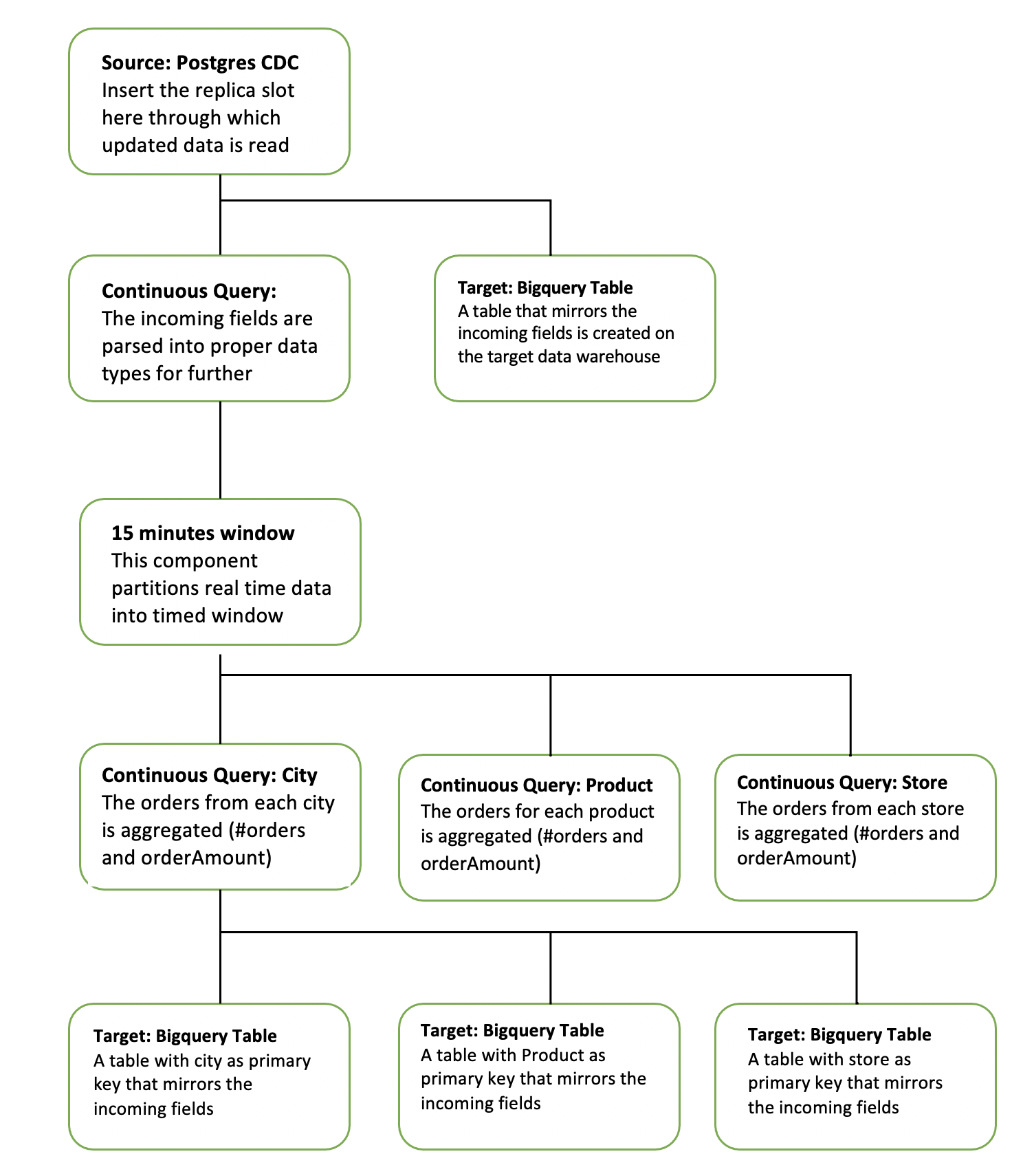

The diagram below simplifies each component of the app.

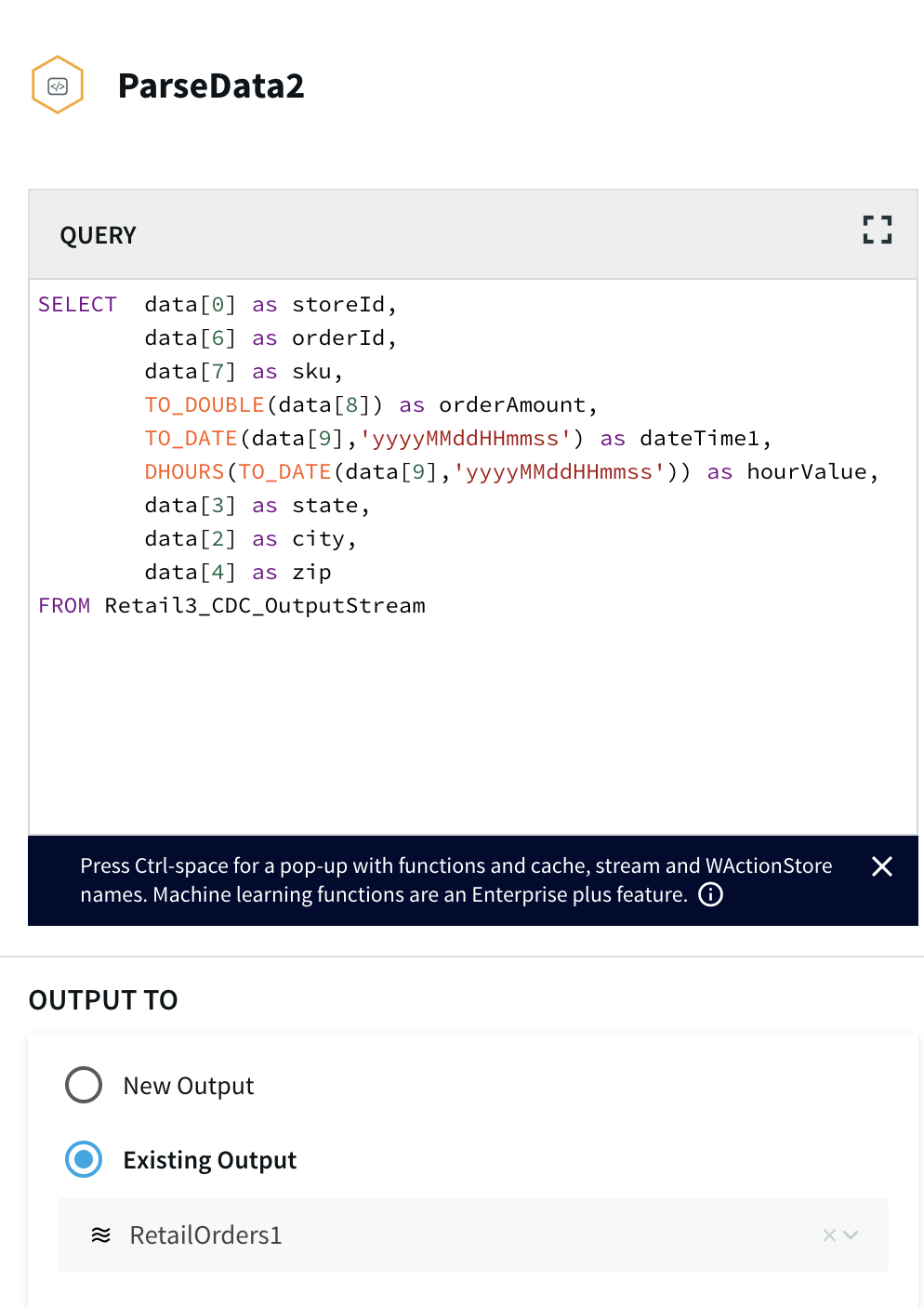

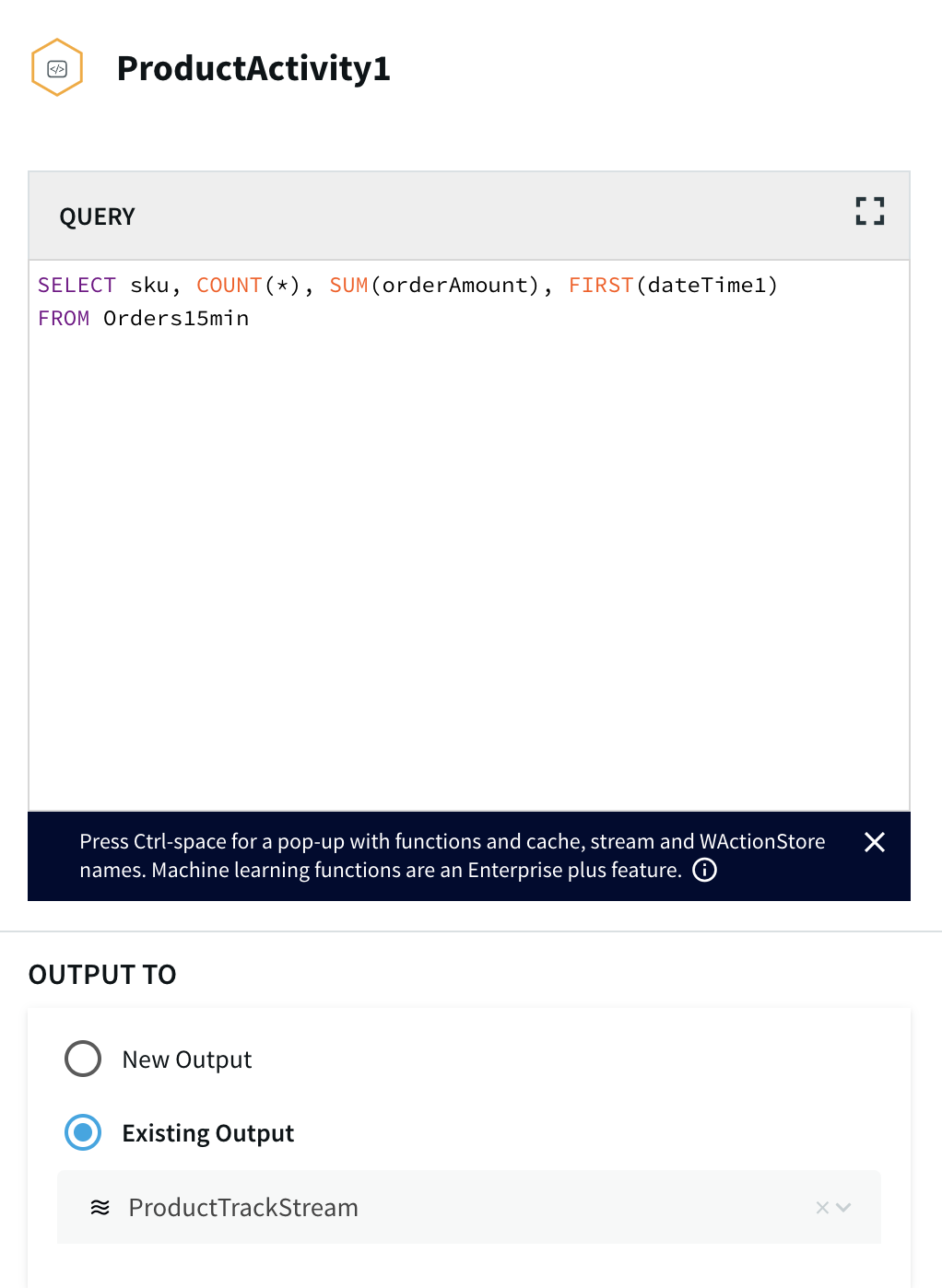

The continuous query is a sql-based query that is used to query the database.The following queries are for ParseData2 where data is transformed into proper data type for further processing and ProductActivity1 where product data is aggregated to derive useful insights about each product.

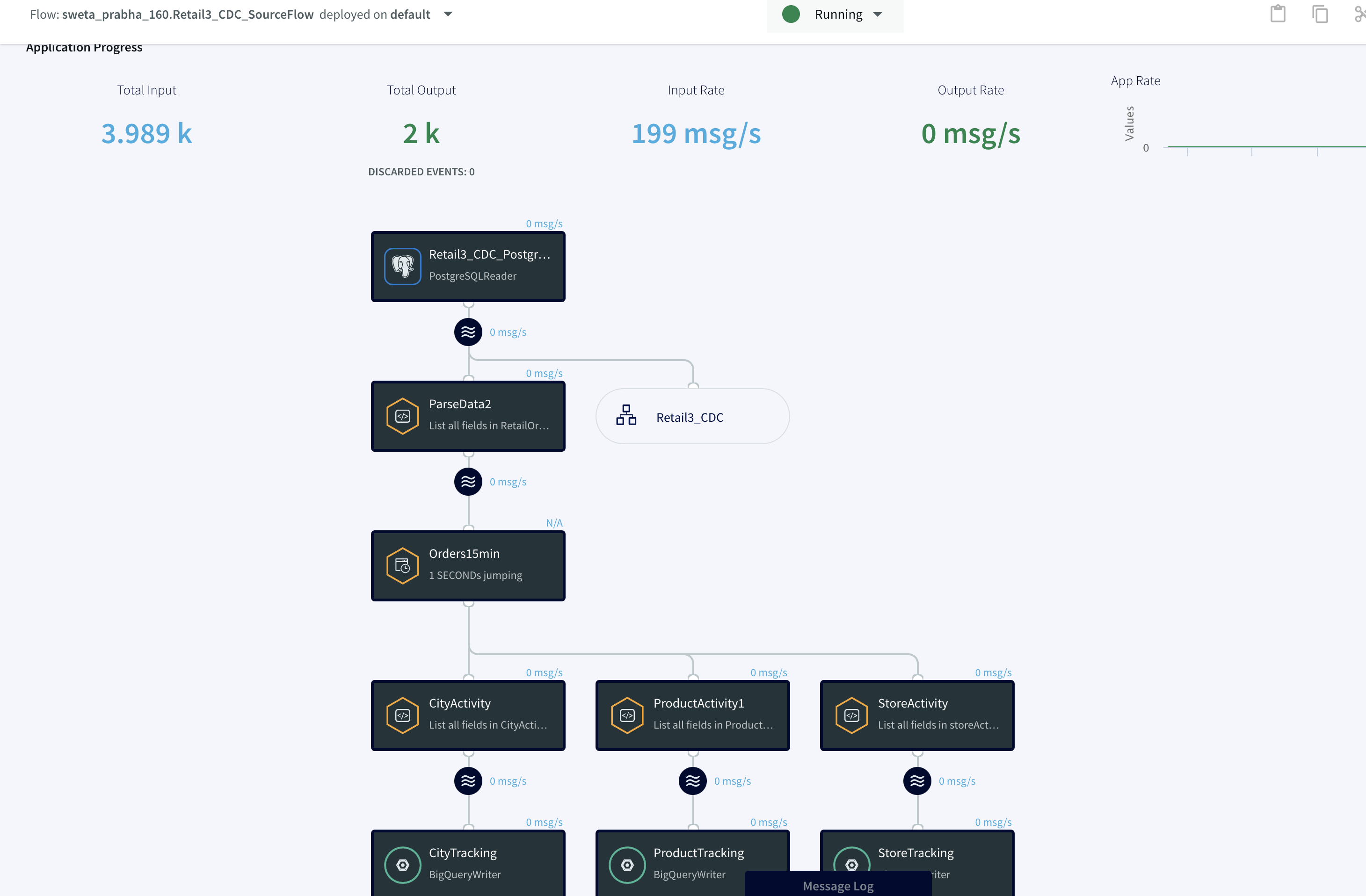

Step 3: Deploy and Run the Striim app for Fast Data Streaming

In this step you will deploy and run the final app to visualize the power of Change Data Capture in Striim’s next generation technology.

Setting Up the Postgres to BigQuery Streaming Application

Step 1: Follow this recipe to create a Replication Slot and user for Change Data Capture

The replication user reads change data from your source database and replicates it to the target in real-time.

Step 2: Download the dataset and TQL file from our github repo and set up your Postgres Source and BigQuery Target.

You can find the csv dataset in our github repo. Set up your BigQuery dataset and table that will act as a target for the streaming application

Step 3: Configure source and target components in the app

Configure the source and target components in the striim app. Please follow the detailed steps from our recipe.

Deploy and run real-time data streaming app

Wrapping Up: Start Your Free Trial

Our tutorial showed you how easy it is to capture change data from PostgreSQL to Google BigQuery, a leading cloud data warehouse. By constantly moving your data into BigQuery, you could now start building analytics or machine learning models on top, all

with minimal impact to your current systems. You could also start ingesting and normalizing more datasets with Striim to fully take advantage of your data when combined with the power of BigQuery..

As always, feel free to reach out to our integration experts to schedule a demo, or try Striim for free here.

Tools you need

Striim

Striim’s unified data integration and streaming platform connects clouds, data and applications.

PostgreSQL

PostgreSQL is an open-source relational database management system.

Google BigQuery

BigQuery is a serverless, highly scalable multicloud data warehouse.