A well-executed data pipeline can make or break your company’s ability to leverage real-time insights and stay competitive. Thriving in today’s world requires building modern data pipelines that make moving data and extracting valuable insights quick and simple.

Today, we’ll answer the question, “What is a data pipeline?” Then, we’ll explore a data pipeline example and dive deeper into the key differences between a traditional data pipeline vs ETL.

What is a Data Pipeline?

A data pipeline refers to a series of processes that transport data from one or more sources to a destination, such as a data warehouse, database, or application. These pipelines are essential for managing and optimizing the flow of data, ensuring it’s prepared and formatted for specific uses, such as analytics, reporting, or machine learning.

Throughout the pipeline, data undergoes various transformations such as filtering, cleaning, aggregating, enriching, and even real-time analysis. These steps guarantee that data is accurate, reliable, and meaningful by the time it reaches its destination, making it possible for teams to generate insights and make data-driven decisions.
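To make these transformation steps concrete, here is a minimal sketch in Python that filters, cleans, enriches, and aggregates a handful of records. The event fields, the `STORE_REGION` lookup table, and the function names are illustrative assumptions, not part of any specific product.

```python
from collections import defaultdict

# Hypothetical raw events; field names are illustrative only.
RAW_EVENTS = [
    {"store": "nyc", "amount": "19.99"},
    {"store": "NYC ", "amount": "5.01"},
    {"store": "sf", "amount": None},  # incomplete record
    {"store": "sf", "amount": "10.00"},
]

# Hypothetical reference data used for enrichment.
STORE_REGION = {"nyc": "east", "sf": "west"}


def clean(events):
    """Filter out incomplete records, normalize store codes, parse amounts."""
    for e in events:
        if e["amount"] is None:
            continue  # filtering: drop records with no amount
        yield {"store": e["store"].strip().lower(), "amount": float(e["amount"])}


def enrich(events):
    """Attach a region to each event from the lookup table."""
    for e in events:
        yield {**e, "region": STORE_REGION.get(e["store"], "unknown")}


def aggregate(events):
    """Sum amounts per region."""
    totals = defaultdict(float)
    for e in events:
        totals[e["region"]] += e["amount"]
    return {region: round(total, 2) for region, total in totals.items()}


def run_pipeline(events):
    """Chain the steps: clean, then enrich, then aggregate."""
    return aggregate(enrich(clean(events)))
```

Because each step is a generator, records flow through one at a time, which is roughly how a streaming pipeline chains its stages.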

In addition to the individual steps of a pipeline, data pipeline architecture refers to how the pipeline is designed to collect, flow, and deliver data effectively. This architecture can vary based on the needs of the organization and the type of data being processed. There are two primary approaches to moving data through a pipeline:

- Batch processing: In batch processing, batches of data are moved from sources to targets on a one-time or regularly scheduled basis. Batch processing is the tried-and-true legacy approach to moving data, but it doesn’t allow for real-time analysis and insights, which is its primary shortcoming.

- Stream processing: Stream processing enables real-time data movement by continuously collecting and processing data as it flows, which is crucial for applications needing immediate insights like monitoring or fraud detection. Change Data Capture (CDC) plays a key role here by capturing and streaming only the changes (inserts, updates, deletes) in real time, ensuring efficient data handling and up-to-date information across systems. As a result, stream processing makes real-time business intelligence feasible.
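The difference between the two approaches can be sketched in a few lines of Python. In this simplified example, the change-event shape (`op`, `key`, `row`) and the function names are assumptions made for illustration; real CDC tools define their own event formats.

```python
def batch_copy(source_rows):
    """Batch style: rebuild the target from a full snapshot of the source."""
    return {row["id"]: row for row in source_rows}


def apply_change(replica, change):
    """CDC style: apply a single insert, update, or delete to the replica."""
    op, key = change["op"], change["key"]
    if op in ("insert", "update"):
        replica[key] = change["row"]
    elif op == "delete":
        replica.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")
    return replica


def stream_apply(replica, change_stream):
    """Apply changes one by one, in the order they arrive."""
    for change in change_stream:
        apply_change(replica, change)
    return replica
```

The streamed replica ends up in the same state as a fresh batch copy, but it only ever moves the rows that changed.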

Why are Data Pipelines Significant?

Now that we’ve answered the question, ‘What is a data pipeline?’, we can dive deeper into the essential role they play. Data pipelines are significant to businesses because they:

- Consolidate Data: Data pipelines are responsible for integrating and unifying data from diverse sources and formats, making it consistent and usable for analytics and business intelligence.

- Enhance Accessibility: Thanks to data pipelines, you can provide team members with necessary data without granting direct access to sensitive production systems.

- Support Decision-Making: When you ensure that clean, integrated data is readily available, you facilitate informed decision-making and boost operational efficiency.

What is a Data Pipeline Example?

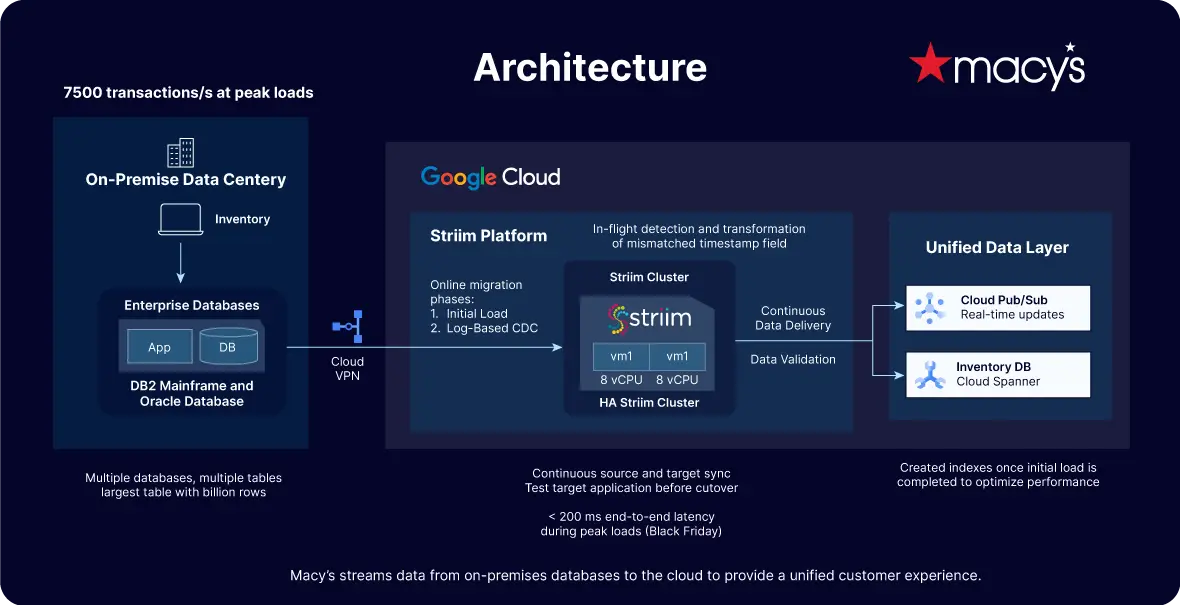

As you’ll see by taking a look at this data pipeline example, the complexity and design of a pipeline vary depending on intended use. For instance, Macy’s streams change data from on-premises databases to Google Cloud. As a result, customers enjoy a unified experience whether they’re shopping in a brick-and-mortar store or online.

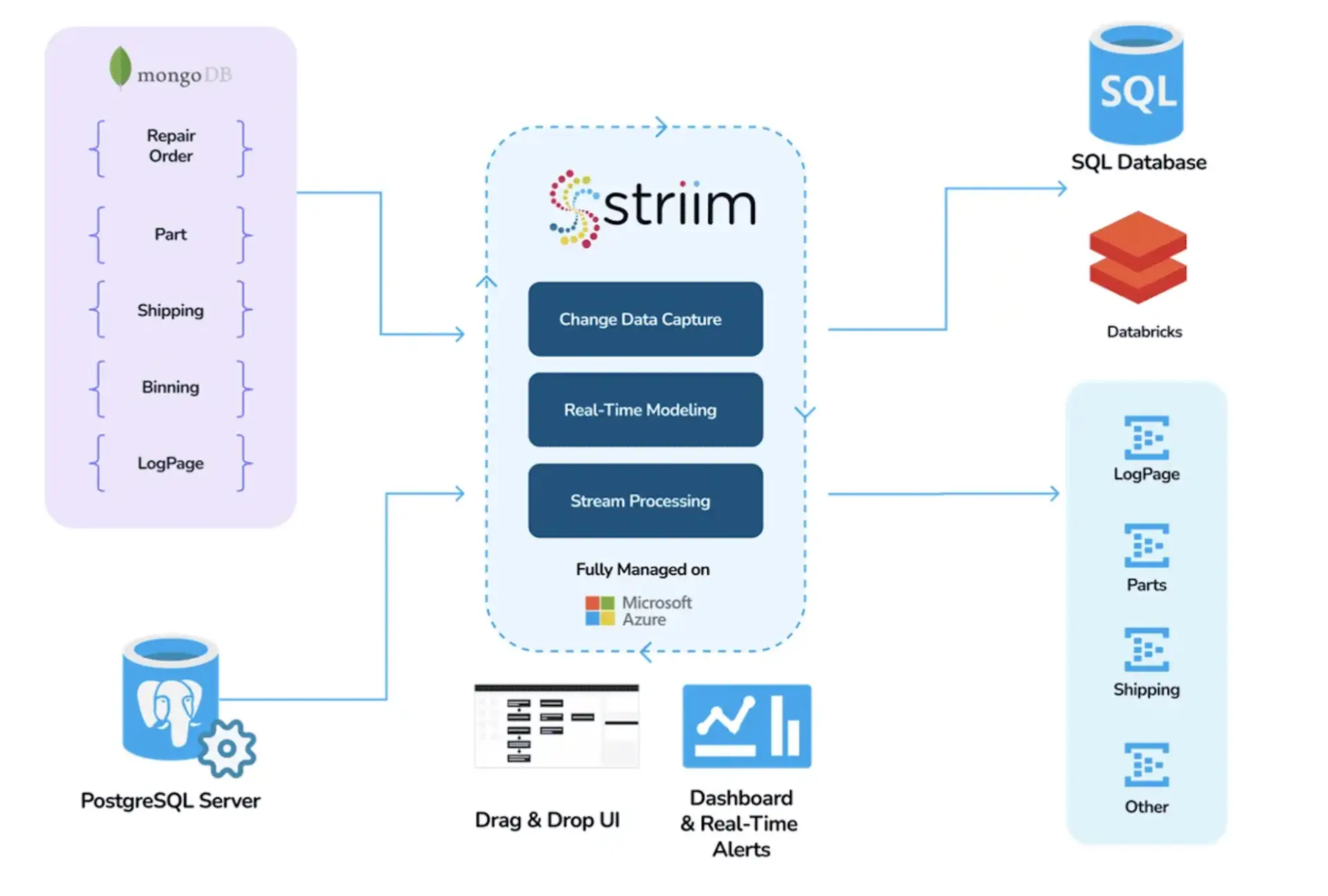

Another excellent data pipeline example is American Airlines’ work with Striim. Striim supported American Airlines by implementing a comprehensive data pipeline solution to modernize and accelerate operations.

To achieve this, the TechOps team implemented a real-time data hub using MongoDB, Striim, Azure, and Databricks to maintain seamless, large-scale operations. This setup uses change data capture from MongoDB to capture operational data in real time, then processes and models it for downstream systems. The data is streamed in real time to end users, delivering valuable insights to TechOps and business teams, allowing them to monitor and act on operational data to enhance the customer travel experience.

This data pipeline diagram illustrates how it works:

Data Pipeline vs ETL: What’s the Difference?

You’re likely familiar with the term ‘ETL data pipeline’ and may be curious to learn the difference between a traditional data pipeline vs ETL. In actuality, ETL pipelines are simply a form of data pipeline. To understand an ETL data pipeline fully, it’s imperative to understand the process that it entails.

ETL stands for Extract, Transform, Load. This process involves:

- Extraction: Data is extracted from a source or multiple sources.

- Transformation: Data is processed and converted into the appropriate format for the target destination — often a data warehouse or lake.

- Loading: The transformed data is transferred into the target system, where your team can access it for analysis. It’s now usable for various use cases, including reporting, insights, and decision-making.
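The three phases above can be sketched with nothing but the Python standard library. This is a minimal illustration, assuming a small CSV string as the source and an in-memory SQLite database as the target; the `orders` table and its columns are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would be a file, API, or database.
SOURCE_CSV = """order_id,customer,total_usd
1001, Ada ,19.50
1002,Grace,5.25
"""


def extract(text):
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim whitespace and convert types to fit the target schema."""
    return [
        (int(r["order_id"]), r["customer"].strip(), float(r["total_usd"]))
        for r in rows
    ]


def load(conn, records):
    """Load: write the transformed records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, total_usd REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_etl(conn, text=SOURCE_CSV):
    """Run the three phases in order."""
    load(conn, transform(extract(text)))
```

Calling `run_etl(sqlite3.connect(":memory:"))` loads both orders into the target table, where they can be queried with ordinary SQL.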

A traditional ETL data pipeline typically involves disk-based processing, which can lead to slower transformation times. This approach is suitable for batch processing where data is processed at scheduled intervals, but may not meet the needs of real-time data demands.

While legacy ETL has a slow transformation step, modern ETL platforms, like Striim, have evolved to replace disk-based processing with in-memory processing. This advancement allows for real-time data transformation, enrichment, and analysis, providing faster and more efficient data processing. Striim, for example, handles data in near real-time, enabling quicker insights and more agile decision-making.

Now, let’s dive into the seven must-have features of modern data pipelines.

7 Must-Have Features of Modern Data Pipelines

To create an effective modern data pipeline, incorporating these seven key features is essential. Though not an exhaustive list, these elements are crucial for helping your team make faster and more informed business decisions.

1. Real-Time Data Processing and Analytics

The number one requirement of a successful data pipeline is its ability to load, transform, and analyze data in near real time. This enables businesses to quickly act on insights. To begin, it’s essential that data is ingested without delay from multiple sources. These sources may include databases, IoT devices, messaging systems, and log files. For databases, log-based Change Data Capture (CDC) is the gold standard for producing a stream of real-time data.

Real-time, continuous data processing is superior to batch-based processing because the latter can take hours or even days to extract and transfer information. Because of this processing delay, businesses are unable to make timely decisions, as data is outdated by the time it’s finally transferred to the target. This can result in major consequences. For example, a lucrative social media trend may rise, peak, and fade before a company can spot it, or a security threat might be spotted too late, allowing malicious actors to carry out their plans.

Real-time data pipelines equip business leaders with the knowledge necessary to make data-fueled decisions. Whether you’re in the healthcare industry or logistics, being data-driven is equally important. Here’s an example: Suppose your fleet management business uses batch processing to analyze vehicle data. The delay between data collection and processing means you only see updates every few hours, leading to slow responses to issues like engine failures or route inefficiencies. With real-time data processing, you can monitor vehicle performance and receive instant alerts, allowing for immediate action and improving overall fleet efficiency.
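The fleet scenario can be sketched as a small Python generator that raises an alert the moment a reading crosses a threshold, rather than waiting for the next batch run. The field names and the 110 °C threshold are hypothetical values chosen for illustration.

```python
def alerts(readings, max_temp_c=110.0):
    """Yield an alert as soon as a reading exceeds the temperature threshold."""
    for r in readings:
        if r["engine_temp_c"] > max_temp_c:
            yield (
                f"vehicle {r['vehicle_id']}: "
                f"engine temperature {r['engine_temp_c']} C"
            )
```

Because `alerts` consumes readings one at a time, it can be attached to a live stream of telemetry and will emit each alert without waiting for the rest of the data to arrive.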

2. Scalable Cloud-Based Architecture

Modern data pipelines rely on scalable, cloud-based architecture to handle varying workloads efficiently. Unlike traditional pipelines, which struggle with parallel processing and fixed resources, cloud-based pipelines leverage the flexibility of the cloud to automatically scale compute and storage resources up or down based on demand.

In this architecture, compute resources are distributed across independent clusters, which can quickly grow in both number and size while maintaining access to a shared dataset. This setup keeps data processing times predictable, because additional resources can be provisioned on demand to accommodate spikes in data volume.

Cloud-based data pipelines offer agility and elasticity, enabling businesses to adapt to trends without extensive planning. For example, a company anticipating a summer sales surge can rapidly increase processing power to handle the increased data load, ensuring timely insights and operational efficiency. Without such elasticity, businesses would struggle to respond swiftly to changing trends and data demands.
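As a rough illustration of elasticity, the following Python sketch computes how many workers a pipeline would need to clear a backlog within a target time. The function name, parameters, and limits are all assumptions; real cloud autoscalers use their own policies and metrics.

```python
import math


def desired_workers(
    backlog_events,
    events_per_worker_per_min,
    target_minutes,
    min_workers=1,
    max_workers=50,
):
    """Return the worker count needed to drain the backlog in the target time."""
    capacity_per_worker = events_per_worker_per_min * target_minutes
    needed = math.ceil(backlog_events / capacity_per_worker)
    # Clamp to the configured floor and ceiling.
    return max(min_workers, min(max_workers, needed))
```

For example, a backlog of 120,000 events, with each worker handling 1,000 events per minute and a 10-minute target, calls for 12 workers; an empty backlog scales back down to the minimum of 1.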

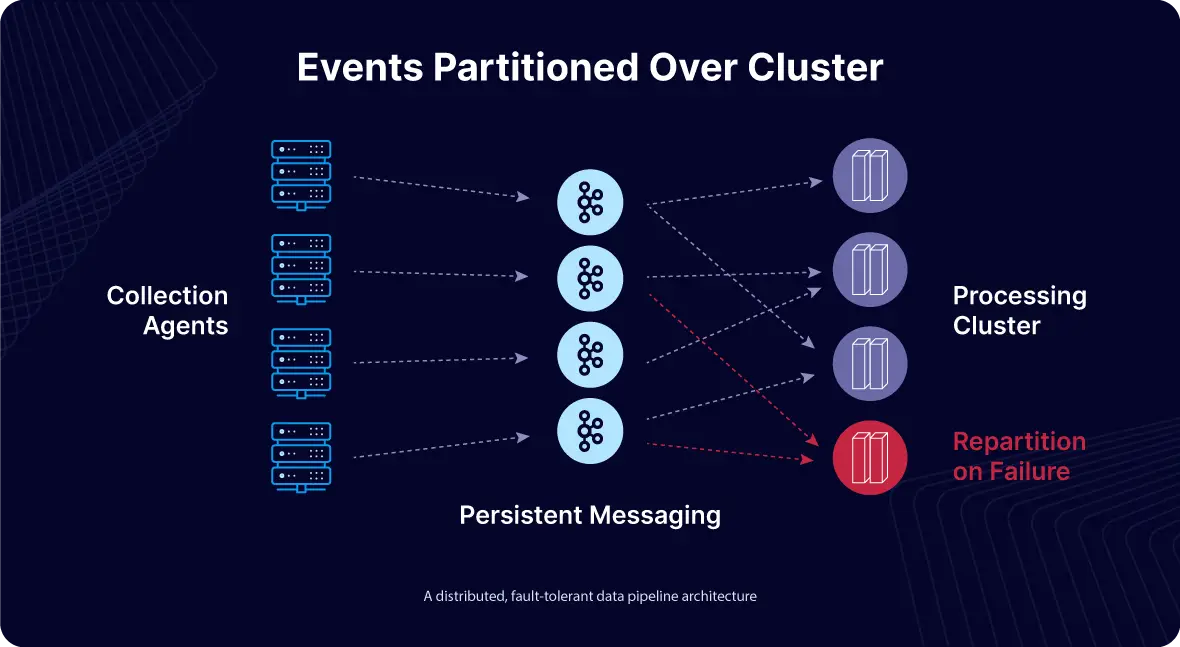

3. Fault-Tolerant Architecture

It’s possible for data pipeline failure to occur while information is in transit. Thankfully, modern pipelines are designed to mitigate these risks and ensure high reliability. Today’s data pipelines feature a distributed architecture that offers immediate failover and robust alerts for node, application, and service failures. Because of this, we consider fault-tolerant architecture a must-have.

In a fault-tolerant setup, if one node fails, another node within the cluster seamlessly takes over, ensuring continuous operation without major disruptions. This distributed approach enhances the overall reliability and availability of data pipelines, minimizing the impact on mission-critical processes.
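Here is a simplified Python sketch of that failover behavior. The `Node` class and `process_with_failover` function are hypothetical stand-ins; a production cluster would rely on health checks and coordination services rather than a simple loop.

```python
class Node:
    """A hypothetical cluster node that may or may not be healthy."""

    def __init__(self, name, healthy=True):
        self.name = name
        self.healthy = healthy

    def process(self, event):
        if not self.healthy:
            raise ConnectionError(f"{self.name} is unavailable")
        return f"{self.name} processed {event}"


def process_with_failover(nodes, event, on_failure=print):
    """Try each node in order; alert and fail over when a node is down."""
    for node in nodes:
        try:
            return node.process(event)
        except ConnectionError as exc:
            on_failure(f"ALERT: {exc}; failing over")
    raise RuntimeError("no healthy nodes available")
```

If the first node is down, the event is handed to the next one and an alert is recorded, so processing continues without manual intervention.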

4. Exactly-Once Processing (E1P)

Data loss and duplication are critical issues in data pipelines that need to be addressed for reliable data processing. Modern pipelines incorporate Exactly-Once Processing (E1P) to ensure data integrity. This involves advanced checkpointing mechanisms that precisely track the status of events as they move through the pipeline.

Checkpointing records the processing progress and coordinates with data replay features from many data sources, enabling the pipeline to rewind and resume from the correct point in case of failures. For sources without native data replay capabilities, persistent messaging systems within the pipeline facilitate data replay and checkpointing, ensuring each event is processed exactly once. This technical approach is essential for maintaining data consistency and accuracy across the pipeline.
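The checkpoint-and-replay idea can be sketched in Python as follows. This is a toy model under stated assumptions: `ReplayableSource` and `Pipeline` are hypothetical classes, the sink is a plain list, and the write and checkpoint update are treated as a single step. In a real system those two actions must be made atomic (or the sink idempotent) for the exactly-once guarantee to hold.

```python
class ReplayableSource:
    """Hypothetical source that can replay events from a given offset."""

    def __init__(self, events):
        self._events = list(events)

    def read_from(self, offset):
        for i in range(offset, len(self._events)):
            yield i, self._events[i]


class Pipeline:
    def __init__(self, source):
        self.source = source
        self.checkpoint = 0  # offset of the next event to process
        self.sink = []       # stands in for the target system

    def run(self, fail_at=None):
        """Process events from the last checkpoint onward."""
        for offset, event in self.source.read_from(self.checkpoint):
            if offset == fail_at:
                raise RuntimeError("simulated crash")
            # Assumed atomic here: deliver the event, then advance the checkpoint.
            self.sink.append(event)
            self.checkpoint = offset + 1
```

After a simulated crash, calling `run()` again rewinds to the saved checkpoint and resumes from there, so no event is skipped and none is delivered twice.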

5. Self-Service Management

Modern data pipelines facilitate seamless integration between a wide range of tools, including data integration platforms, data warehouses, data lakes, and programming languages. This interconnected approach enables teams to create, manage, and automate data pipelines with ease and minimal intervention.

In contrast, traditional data pipelines often require significant manual effort to integrate various external tools for data ingestion, transfer, and analysis. This complexity can lead to bottlenecks when building the pipelines, as well as extended maintenance time. Additionally, legacy systems frequently struggle with diverse data types, such as structured, semi-structured, and unstructured data.

Contemporary pipelines simplify data management by supporting a wide array of data formats and automating many processes. This reduces the need for extensive in-house resources and enables businesses to more effectively leverage data with less effort.

6. Capable of Processing High Volumes of Data in Various Formats

It’s predicted that the world will generate 181 zettabytes of data by 2025. To get a better understanding of how tremendous that is, consider this: one zettabyte alone is equal to about 1 trillion gigabytes.

Since unstructured and semi-structured data account for 80% of the data collected by companies, modern data pipelines need to be capable of efficiently processing these diverse data types. This includes handling semi-structured formats such as JSON, HTML, and XML, as well as unstructured data like log files, sensor data, and weather data.

A robust big data pipeline must be adept at moving and unifying data from various sources, including applications, sensors, databases, and log files. The pipeline should support near-real-time processing, which involves standardizing, cleaning, enriching, filtering, and aggregating data. This ensures that disparate data sources are integrated and transformed into a cohesive format for accurate analysis and actionable insights.
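To illustrate what unifying disparate formats can look like, this Python sketch parses the same kind of sensor reading from JSON, XML, and a plain log line into one common record shape. The field names (`device`, `temp_c`) and the `key=value` log format are assumptions made for the example.

```python
import json
import xml.etree.ElementTree as ET


def from_json(text):
    """Parse a JSON reading into the common record shape."""
    doc = json.loads(text)
    return {"device": doc["device"], "temp_c": float(doc["temp_c"])}


def from_xml(text):
    """Parse an XML reading into the common record shape."""
    root = ET.fromstring(text)
    return {
        "device": root.findtext("device"),
        "temp_c": float(root.findtext("temp_c")),
    }


def from_log_line(line):
    """Parse a 'key=value' log line, e.g. 'device=s3 temp_c=20.0'."""
    fields = dict(pair.split("=", 1) for pair in line.split())
    return {"device": fields["device"], "temp_c": float(fields["temp_c"])}


# Map each input format to its parser.
PARSERS = {"json": from_json, "xml": from_xml, "log": from_log_line}


def unify(inputs):
    """Convert (format, payload) pairs into a list of uniform records."""
    return [PARSERS[fmt](payload) for fmt, payload in inputs]
```

Once every source produces the same record shape, downstream steps such as filtering, enrichment, and aggregation no longer need to know where the data came from.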

7. Prioritizes Efficient Data Pipeline Development

Modern data pipelines are crafted with DataOps principles, which integrate diverse technologies and processes to accelerate development and delivery cycles. DataOps focuses on automating the entire lifecycle of data pipelines, ensuring timely data delivery to stakeholders.

By streamlining pipeline development and deployment, organizations can more easily adapt to new data sources and scale their pipelines as needed. Testing becomes more straightforward as pipelines are developed in the cloud, allowing engineers to quickly create test scenarios that mirror existing environments. This allows thorough testing and adjustments before final deployment, optimizing the efficiency of data pipeline development.

Gain a Competitive Edge with Striim

Data pipelines are crucial for moving, transforming, and storing data, helping organizations gain key insights. Modernizing these pipelines is essential to handle increasing data complexity and size, ultimately enabling faster and better decision-making.

Striim provides a robust streaming data pipeline solution with integration across hundreds of sources and targets, including databases, message queues, log files, data lakes, and IoT. Plus, our platform features scalable in-memory streaming SQL for real-time data processing and analysis. Schedule a demo for a personalized walkthrough to experience Striim.